Model Card

Browse filesSome additional information for the model card, based on the format we are using as part of our effort to standardise model cards

Feel free to merge if you are ok with the changes! (cc @ Marissa @ Meg )

README.md

CHANGED

|

@@ -12,26 +12,67 @@ tags:

|

|

| 12 |

|

| 13 |

# German BERT

|

| 14 |

|

| 15 |

-

|

| 16 |

-

|

| 17 |

-

|

| 18 |

-

|

| 19 |

-

|

| 20 |

-

|

| 21 |

-

|

| 22 |

-

|

| 23 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 24 |

For details see the related [FARM issue](https://github.com/deepset-ai/FARM/issues/60). If you want to use the old vocab we have also uploaded a ["deepset/bert-base-german-cased-oldvocab"](https://huggingface.co/deepset/bert-base-german-cased-oldvocab) model.

|

| 25 |

|

| 26 |

-

|

|

|

|

| 27 |

- We trained using Google's Tensorflow code on a single cloud TPU v2 with standard settings.

|

| 28 |

- We trained 810k steps with a batch size of 1024 for sequence length 128 and 30k steps with sequence length 512. Training took about 9 days.

|

| 29 |

-

- As training data we used the latest German Wikipedia dump (6GB of raw txt files), the OpenLegalData dump (2.4 GB) and news articles (3.6 GB).

|

| 30 |

-

- We cleaned the data dumps with tailored scripts and segmented sentences with spacy v2.1. To create tensorflow records we used the recommended sentencepiece library for creating the word piece vocabulary and tensorflow scripts to convert the text to data usable by BERT.

|

| 31 |

-

|

| 32 |

|

| 33 |

See https://deepset.ai/german-bert for more details

|

| 34 |

|

|

|

|

| 35 |

## Hyperparameters

|

| 36 |

|

| 37 |

```

|

|

@@ -43,8 +84,12 @@ lr_schedule = LinearWarmup

|

|

| 43 |

num_warmup_steps = 10_000

|

| 44 |

```

|

| 45 |

|

| 46 |

-

##

|

| 47 |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 48 |

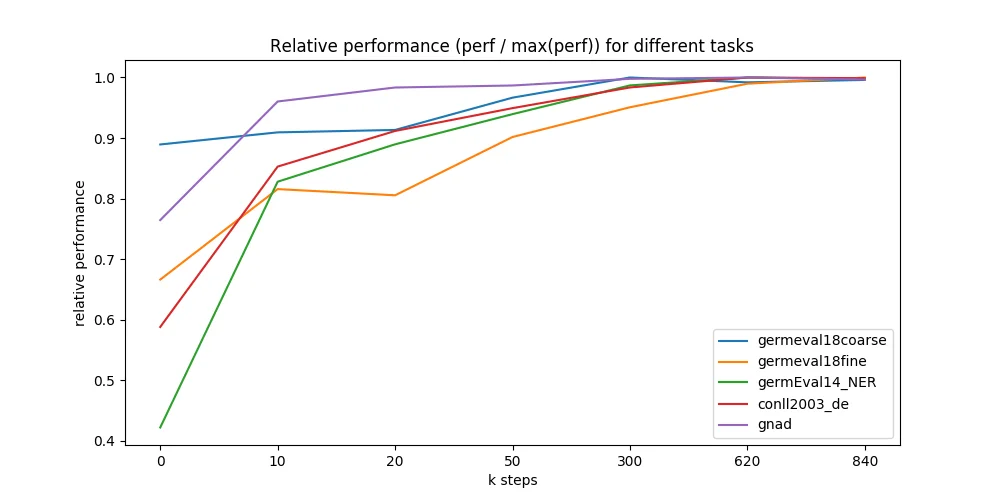

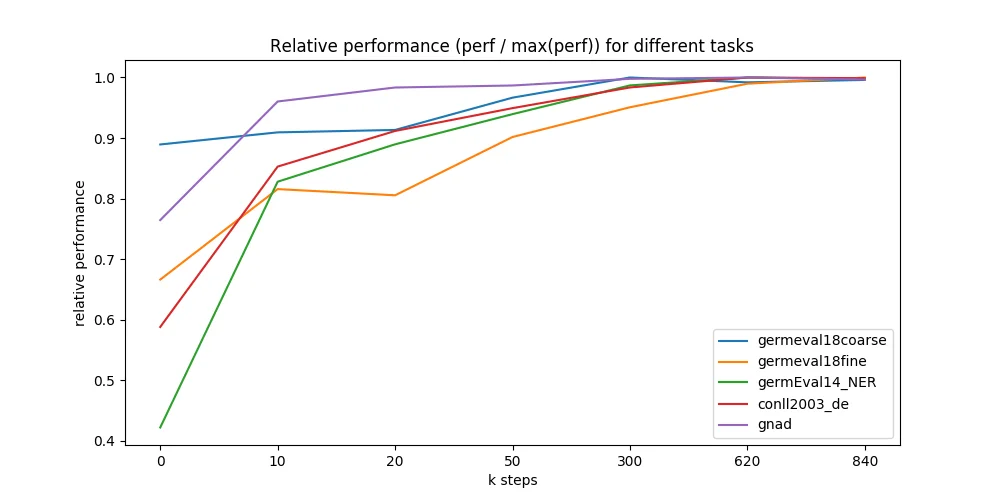

During training we monitored the loss and evaluated different model checkpoints on the following German datasets:

|

| 49 |

|

| 50 |

- germEval18Fine: Macro f1 score for multiclass sentiment classification

|

|

@@ -61,22 +106,49 @@ We further evaluated different points during the 9 days of pre-training and were

|

|

| 61 |

|

| 62 |

|

| 63 |

|

| 64 |

-

## Authors

|

| 65 |

-

- Branden Chan: `branden.chan [at] deepset.ai`

|

| 66 |

-

- Timo Möller: `timo.moeller [at] deepset.ai`

|

| 67 |

-

- Malte Pietsch: `malte.pietsch [at] deepset.ai`

|

| 68 |

-

- Tanay Soni: `tanay.soni [at] deepset.ai`

|

| 69 |

|

| 70 |

-

##

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 71 |

|

| 72 |

|

| 73 |

We bring NLP to the industry via open source!

|

| 74 |

Our focus: Industry specific language models & large scale QA systems.

|

| 75 |

-

|

| 76 |

-

Some of our work:

|

| 77 |

-

- [German BERT (aka "bert-base-german-cased")](https://deepset.ai/german-bert)

|

| 78 |

-

- [FARM](https://github.com/deepset-ai/FARM)

|

| 79 |

-

- [Haystack](https://github.com/deepset-ai/haystack/)

|

| 80 |

|

| 81 |

Get in touch:

|

| 82 |

-

[Twitter](https://twitter.com/deepset_ai) | [LinkedIn](https://www.linkedin.com/company/deepset-ai/) |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 12 |

|

| 13 |

# German BERT

|

| 14 |

|

| 15 |

+

|

| 16 |

+

## Table of Contents

|

| 17 |

+

- [Model Details](#model-details)

|

| 18 |

+

- [Uses](#uses)

|

| 19 |

+

- [Risks, Limitations and Biases](#risks-limitations-and-biases)

|

| 20 |

+

- [Training](#training)

|

| 21 |

+

- [Evaluation](#evaluation)

|

| 22 |

+

- [Environmental Impact](#environmental-impact)

|

| 23 |

+

- [Model Card Contact](#model-card-contact)

|

| 24 |

+

- [How to Get Started With the Model](#how-to-get-started-with-the-model)

|

| 25 |

+

|

| 26 |

+

## Model Details

|

| 27 |

+

- **Model Description:**

|

| 28 |

+

German BERT allows the developers working with text data in German to be more efficient with their natural language processing (NLP) tasks.

|

| 29 |

+

- **Developed by:**

|

| 30 |

+

- [Branden Chan](branden.chan@deepset.ai)

|

| 31 |

+

- [Timo Möller](timo.moeller@deepset.ai)

|

| 32 |

+

- [Malte Pietsch](malte.pietsch@deepset.ai)

|

| 33 |

+

- [Tanay Soni](tanay.soni@deepset.ai)

|

| 34 |

+

- **Model Type:** Fill-Mask

|

| 35 |

+

- **Language(s):** German

|

| 36 |

+

- **License:** MIT

|

| 37 |

+

- **Parent Model:** See the [BERT base cased model](https://huggingface.co/bert-base-cased) for more information about the BERT base model.

|

| 38 |

+

- **Resources for more information:**

|

| 39 |

+

- **Update October 2020:** [Research Paper](https://aclanthology.org/2020.coling-main.598/)

|

| 40 |

+

- [Website: German BERT](https://deepset.ai/german-bert)

|

| 41 |

+

- [GitRepo: FARM](https://github.com/deepset-ai/FARM)

|

| 42 |

+

- [Git Repo: Haystack](https://github.com/deepset-ai/haystack/)

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

## Uses

|

| 46 |

+

|

| 47 |

+

#### Direct Use

|

| 48 |

+

|

| 49 |

+

This model can be used for masked language modelling.

|

| 50 |

+

|

| 51 |

+

## Risks, Limitations and Biases

|

| 52 |

+

**CONTENT WARNING: Readers should be aware this section contains content that is disturbing, offensive, and can propagate historical and current stereotypes.**

|

| 53 |

+

|

| 54 |

+

Significant research has explored bias and fairness issues with language models (see, e.g., [Sheng et al. (2021)](https://aclanthology.org/2021.acl-long.330.pdf) and [Bender et al. (2021)](https://dl.acm.org/doi/pdf/10.1145/3442188.3445922)).

|

| 55 |

+

|

| 56 |

+

## Training

|

| 57 |

+

|

| 58 |

+

#### Training Data

|

| 59 |

+

**Training data:** Wiki, OpenLegalData, News (~ 12GB)

|

| 60 |

+

- As training data we used the latest German Wikipedia dump (6GB of raw txt files), the OpenLegalData dump (2.4 GB) and news articles (3.6 GB).

|

| 61 |

+

|

| 62 |

+

- The data dumps were cleaned with tailored scripts and segmented sentences with spacy v2.1. To create tensorflow records the model developers used the recommended *sentencepiece* library for creating the word piece vocabulary and tensorflow scripts to convert the text to data usable by BERT.

|

| 63 |

+

|

| 64 |

+

**Update April 3rd, 2020**: the model developers updated the vocabulary file on deepset's s3 to conform with the default tokenization of punctuation tokens.

|

| 65 |

+

|

| 66 |

For details see the related [FARM issue](https://github.com/deepset-ai/FARM/issues/60). If you want to use the old vocab we have also uploaded a ["deepset/bert-base-german-cased-oldvocab"](https://huggingface.co/deepset/bert-base-german-cased-oldvocab) model.

|

| 67 |

|

| 68 |

+

|

| 69 |

+

#### Training Procedure

|

| 70 |

- We trained using Google's Tensorflow code on a single cloud TPU v2 with standard settings.

|

| 71 |

- We trained 810k steps with a batch size of 1024 for sequence length 128 and 30k steps with sequence length 512. Training took about 9 days.

|

|

|

|

|

|

|

|

|

|

| 72 |

|

| 73 |

See https://deepset.ai/german-bert for more details

|

| 74 |

|

| 75 |

+

|

| 76 |

## Hyperparameters

|

| 77 |

|

| 78 |

```

|

|

|

|

| 84 |

num_warmup_steps = 10_000

|

| 85 |

```

|

| 86 |

|

| 87 |

+

## Evaluation

|

| 88 |

|

| 89 |

+

|

| 90 |

+

* **Eval data:** Conll03 (NER), GermEval14 (NER), GermEval18 (Classification), GNAD (Classification)

|

| 91 |

+

|

| 92 |

+

#### Performance

|

| 93 |

During training we monitored the loss and evaluated different model checkpoints on the following German datasets:

|

| 94 |

|

| 95 |

- germEval18Fine: Macro f1 score for multiclass sentiment classification

|

|

|

|

| 106 |

|

| 107 |

|

| 108 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 109 |

|

| 110 |

+

## Environmental Impact

|

| 111 |

+

|

| 112 |

+

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700). We present the hardware type based on the [associated paper](https://arxiv.org/pdf/2105.09680.pdf).

|

| 113 |

+

|

| 114 |

+

|

| 115 |

+

- **Hardware Type:** Tensorflow code on a single cloud TPU v2

|

| 116 |

+

|

| 117 |

+

- **Hours used:** 216 (9 days)

|

| 118 |

+

|

| 119 |

+

- **Cloud Provider:** GCP

|

| 120 |

+

|

| 121 |

+

- **Compute Region:** [More information needed]

|

| 122 |

+

|

| 123 |

+

- **Carbon Emitted:** [More information needed]

|

| 124 |

+

|

| 125 |

+

|

| 126 |

+

## Model Card Contact

|

| 127 |

+

|

| 128 |

+

<details>

|

| 129 |

+

<summary>Click to expand</summary>

|

| 130 |

+

|

| 131 |

+

|

| 132 |

|

| 133 |

|

| 134 |

We bring NLP to the industry via open source!

|

| 135 |

Our focus: Industry specific language models & large scale QA systems.

|

| 136 |

+

|

|

|

|

|

|

|

|

|

|

|

|

|

| 137 |

|

| 138 |

Get in touch:

|

| 139 |

+

[Twitter](https://twitter.com/deepset_ai) | [LinkedIn](https://www.linkedin.com/company/deepset-ai/) |

|

| 140 |

+

|

| 141 |

+

</details>

|

| 142 |

+

|

| 143 |

+

|

| 144 |

+

## How to Get Started With the Model

|

| 145 |

+

|

| 146 |

+

```python

|

| 147 |

+

from transformers import AutoTokenizer, AutoModelForMaskedLM

|

| 148 |

+

|

| 149 |

+

tokenizer = AutoTokenizer.from_pretrained("bert-base-german-cased")

|

| 150 |

+

|

| 151 |

+

model = AutoModelForMaskedLM.from_pretrained("bert-base-german-cased")

|

| 152 |

+

|

| 153 |

+

```

|

| 154 |

+

|