Alican Akca committed 1e4d453

Parent(s): f05ac6e

Commit message: issues...

- app.py +54 -0

- examples/GANexample1.ipynb +0 -0

- img/example_1.jpg +0 -0

- img/logo.jpg +0 -0

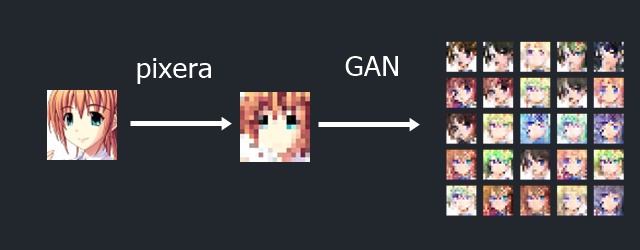

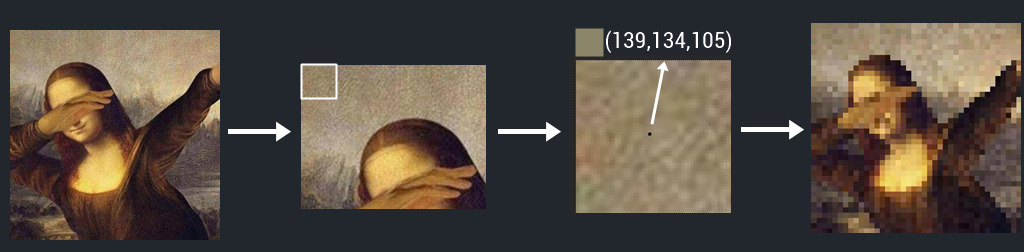

- img/method_1.png +0 -0

- methods/__pycache__/combine.cpython-38.pyc +0 -0

- methods/__pycache__/img2pixl.cpython-38.pyc +0 -0

- methods/__pycache__/instructor.cpython-38.pyc +0 -0

- methods/__pycache__/parse.cpython-38.pyc +0 -0

- methods/combine.py +29 -0

- methods/img2pixl.py +73 -0

- methods/secondMethod.py +12 -0

- methods/white_box_cartoonizer/__pycache__/cartoonize.cpython-37.pyc +0 -0

- methods/white_box_cartoonizer/__pycache__/cartoonize.cpython-38.pyc +0 -0

- methods/white_box_cartoonizer/__pycache__/guided_filter.cpython-37.pyc +0 -0

- methods/white_box_cartoonizer/__pycache__/network.cpython-37.pyc +0 -0

- methods/white_box_cartoonizer/cartoonize.py +83 -0

- methods/white_box_cartoonizer/components/__pycache__/guided_filter.cpython-38.pyc +0 -0

- methods/white_box_cartoonizer/components/__pycache__/network.cpython-38.pyc +0 -0

- methods/white_box_cartoonizer/components/guided_filter.py +73 -0

- methods/white_box_cartoonizer/components/network.py +72 -0

- methods/white_box_cartoonizer/saved_models/checkpoint +3 -0

- methods/white_box_cartoonizer/saved_models/model-33999.index +0 -0

- methods/white_box_cartoonizer/test.jpg +0 -0

- output/result_0.png +0 -0

- output/result_mask_0.png +0 -0

- requirements.txt +15 -0

- src/GAN.py +202 -0

app.py (ADDED, @@ -0,0 +1,54 @@)

```python
import cv2
import numpy as np
import gradio as gr
import paddlehub as hub
from methods.img2pixl import pixL
from methods.combine import combine
from methods.white_box_cartoonizer.cartoonize import WB_Cartoonize

# U2Net salient-object segmentation model from PaddleHub.
model = hub.Module(name='U2Net')
pixl = pixL()
combine = combine()

def func_tab1(image, pixel_size, checkbox1):
    # Read the uploaded file and cartoonize it.
    image = cv2.imread(image.name)
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
    image = WB_Cartoonize().infer(image)
    image = np.array(image)
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
    if checkbox1:
        # "Object-Oriented Inference": segment the foreground, pass the foreground and
        # its mask through toThePixL, then blend them with the cartoonized image.
        result = model.Segmentation(
            images=[image],
            paths=None,
            batch_size=1,
            input_size=320,
            output_dir='output',
            visualization=True)
        result = combine.combiner(images=pixl.toThePixL([result[0]['front'][:,:,::-1], result[0]['mask']],
                                                        pixel_size, image),
                                  background_image=image)
    else:
        # Pass the whole cartoonized image through toThePixL, which expects a list
        # of images plus a background image.
        images = [image]
        result = pixl.toThePixL(images, pixel_size, image)
    return result

def func_tab2():
    pass

inputs_tab1 = [gr.inputs.Image(type='file', label="Image"),
               gr.Slider(4, 100, value=12, step=2, label="Pixel Size"),
               gr.Checkbox(label="Object-Oriented Inference", value=False)]
outputs_tab1 = [gr.Image(type="numpy", label="Front")]

inputs_tab2 = [gr.Video()]
outputs_tab2 = [gr.Video()]

tab1 = gr.Interface(fn=func_tab1,
                    inputs=inputs_tab1,
                    outputs=outputs_tab1)
#Pixera for Videos
tab2 = gr.Interface(fn=func_tab2,
                    inputs=inputs_tab2,
                    outputs=outputs_tab2)

gr.TabbedInterface([tab1], ["Pixera for Images"]).launch()
```
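`func_tab1` switches channel order with `cv2.cvtColor(..., cv2.COLOR_BGR2RGB)` twice and reverses the channels of the segmentation output with the slice `[:,:,::-1]`. For a 3-channel image that slice gives the same result as `COLOR_BGR2RGB`. The NumPy sketch below (`swap_channels` is a hypothetical helper, not part of the repository) shows that the operation is its own inverse:

```python
import numpy as np

def swap_channels(image: np.ndarray) -> np.ndarray:
    """Reverse the last (channel) axis: BGR -> RGB or RGB -> BGR."""
    return image[:, :, ::-1]

# A 1x2 image with one pure-blue and one pure-red pixel, stored in BGR order.
bgr = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)
rgb = swap_channels(bgr)

print(rgb[0, 0].tolist())                       # [0, 0, 255]
print(np.array_equal(swap_channels(rgb), bgr))  # True
```

So, assuming `WB_Cartoonize.infer` preserves channel order, `image` is back in OpenCV's BGR order after the second `cvtColor` call.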

examples/GANexample1.ipynb (ADDED): the diff for this file is too large to render.

img/example_1.jpg (ADDED)

img/logo.jpg (ADDED)

img/method_1.png (ADDED)

methods/__pycache__/combine.cpython-38.pyc (ADDED): binary file, 1.25 kB

methods/__pycache__/img2pixl.cpython-38.pyc (ADDED): binary file, 2.37 kB

methods/__pycache__/instructor.cpython-38.pyc (ADDED): binary file, 1.33 kB

methods/__pycache__/parse.cpython-38.pyc (ADDED): binary file, 1.32 kB

methods/combine.py (ADDED, @@ -0,0 +1,29 @@)

```python
import cv2
import numpy as np

class combine:
    #Author: Alican Akca
    def __init__(self, size=(400, 300), images=[], background_image=None):
        self.size = size
        self.images = images
        self.background_image = background_image

    def combiner(self, images, background_image):
        original = images[0]
        masked = images[1]
        # Resize the background to the (width, height) of the foreground image.
        background = cv2.resize(background_image, (images[0].shape[1], images[0].shape[0]))
        result = blend_images_using_mask(original, background, masked)
        return result

def mix_pixel(pix_1, pix_2, perc):
    # perc is a 0-255 mask value: 255 keeps pix_1, 0 keeps pix_2.
    return (perc/255 * pix_1) + ((255 - perc)/255 * pix_2)

def blend_images_using_mask(img_orig, img_for_overlay, img_mask):
    # Expand a single-channel mask to three channels so it matches the images.
    if len(img_mask.shape) != 3:
        img_mask = cv2.cvtColor(img_mask, cv2.COLOR_GRAY2BGR)

    img_res = mix_pixel(img_orig, img_for_overlay, img_mask)

    return cv2.cvtColor(img_res.astype(np.uint8), cv2.COLOR_BGR2RGB)
```
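`mix_pixel` is a per-pixel linear blend in which the 0-255 mask value acts as the weight of the first image. The sketch below reuses that formula on small constant images; unlike `combine.py`, which expands the mask with `cv2.COLOR_GRAY2BGR`, it assumes a single-channel mask broadcast over the colour axis with NumPy:

```python
import numpy as np

def mix_pixel(pix_1, pix_2, perc):
    # Same formula as methods/combine.py: perc is a 0-255 mask value,
    # 255 keeps pix_1 and 0 keeps pix_2.
    return (perc / 255 * pix_1) + ((255 - perc) / 255 * pix_2)

foreground = np.full((2, 2, 3), 200.0)
background = np.full((2, 2, 3), 100.0)
# A single-channel mask with a trailing axis so it broadcasts over the 3 channels.
mask = np.array([[0.0, 255.0], [51.0, 204.0]])[..., None]

blended = mix_pixel(foreground, background, mask)
print(np.round(blended[..., 0]).tolist())  # [[100.0, 200.0], [120.0, 180.0]]
```

A mask value of 51 (20% of 255) yields 0.2 * 200 + 0.8 * 100 = 120, so soft mask edges from U2Net produce a gradual transition rather than a hard cut.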

methods/img2pixl.py (ADDED, @@ -0,0 +1,73 @@)

```python
import cv2
import numpy as np
from PIL import Image

class pixL:
    #Author: Alican Akca
    def __init__(self, numOfSquaresW=None, numOfSquaresH=None, size=[False, (512, 512)], square=6,
                 ImgH=None, ImgW=None, images=[], background_image=None):
        self.images = images
        self.size = size
        self.background_image = background_image
        self.ImgH = ImgH
        self.ImgW = ImgW
        self.square = square
        self.numOfSquaresW = numOfSquaresW
        self.numOfSquaresH = numOfSquaresH

    def preprocess(self):
        for image in self.images:
            # Note: `image` is rebound here but never stored, so self.images
            # is returned unmodified below.
            size = (image.shape[0] - (image.shape[0] % 4), image.shape[1] - (image.shape[1] % 4))
            image = cv2.resize(image, size)
            image = cv2.cvtColor(image.astype(np.uint8), cv2.COLOR_BGR2RGB)

        if len(self.images) == 1:
            return self.images[0]
        else:
            return self.images

    def toThePixL(self, images, pixel_size, background_image):
        self.background_image = background_image
        self.images = []
        self.square = pixel_size
        for image in images:
            image = Image.fromarray(image)
            image = image.convert("RGB")
            self.ImgW, self.ImgH = image.size
            self.images.append(pixL.epicAlgorithm(self, image))

        return pixL.preprocess(self)

    def numOfSquaresFunc(self):
        self.numOfSquaresW = round((self.ImgW / self.square) + 1)
        self.numOfSquaresH = round((self.ImgH / self.square) + 1)

    def epicAlgorithm(self, image):
        pixValues = []
        pixL.numOfSquaresFunc(self)

        # Sample one pixel per grid cell, offset by half a cell from the cell corner.
        for j in range(1, self.numOfSquaresH):
            for i in range(1, self.numOfSquaresW):
                pixValues.append((image.getpixel((
                    i * self.square - self.square//2,
                    j * self.square - self.square//2)),
                    (i * self.square - self.square//2,
                     j * self.square - self.square//2)))

        background = 255 * np.ones(shape=[self.ImgH - self.square,
                                          self.ImgW - self.square*2, 3],
                                   dtype=np.uint8)

        # Draw a filled square around each sample point in the sampled colour (RGB -> BGR order).
        for pen in range(len(pixValues)):
            cv2.rectangle(background,
                          pt1=(pixValues[pen][1][0] - self.square, pixValues[pen][1][1] - self.square),
                          pt2=(pixValues[pen][1][0] + self.square, pixValues[pen][1][1] + self.square),
                          color=(pixValues[pen][0][2], pixValues[pen][0][1], pixValues[pen][0][0]),
                          thickness=-1)
        # Note: this replaces the rectangle canvas drawn above with the stored
        # background image, resized to the input size.
        background = np.array(self.background_image).astype(np.uint8)
        background = cv2.resize(background, (self.ImgW, self.ImgH), interpolation=cv2.INTER_AREA)

        return background
```
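`epicAlgorithm` samples one pixel per grid cell, at offset `square//2` from the cell corner, and `numOfSquaresFunc` sizes the grid as `round(ImgW / square + 1)`. For a 100-pixel-wide image with `square = 12`, that is `round(9.33) = 9`, so `range(1, 9)` visits 8 columns at x = 6, 18, ..., 90. The sketch below is a simplified, vectorized variant under stated assumptions: it samples the centre of each non-overlapping cell and fills exactly that cell, whereas the original draws `2 * square`-wide rectangles around each sample. `pixelate` is a hypothetical name.

```python
import numpy as np

def pixelate(image: np.ndarray, square: int) -> np.ndarray:
    """Sample the centre pixel of each square x square cell and fill the cell with it.

    A simplified, non-overlapping variant of the grid sampling in pixL.epicAlgorithm.
    Works for 2-D (grayscale) and 3-D (H x W x C) arrays.
    """
    h, w = image.shape[:2]
    # Centre coordinates of every cell, clipped so partial edge cells stay in bounds.
    ys = np.minimum(np.arange(0, h, square) + square // 2, h - 1)
    xs = np.minimum(np.arange(0, w, square) + square // 2, w - 1)
    samples = image[np.ix_(ys, xs)]  # one value (or colour) per cell
    # Repeat each sample `square` times along both axes, then crop to the input size.
    blocks = samples.repeat(square, axis=0).repeat(square, axis=1)
    return blocks[:h, :w]

img = np.arange(16).reshape(4, 4)
print(pixelate(img, 2).tolist())
# [[5, 5, 7, 7], [5, 5, 7, 7], [13, 13, 15, 15], [13, 13, 15, 15]]
```

Filling only the cell keeps the output the same size as the input, so no extra resize step is needed afterwards.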

methods/secondMethod.py (ADDED, @@ -0,0 +1,12 @@)

```python
import numpy as np
import cv2 as cv
from matplotlib import pyplot as plt
import os

# Raw string so the Windows backslashes are not treated as escape sequences.
os.chdir(r"C:\Users\Alican Akca\OneDrive - Izmir Universtiy of Economics\Belgeler\GitHub\pixera")
# Build the path with os.path.join and read the image as grayscale.
img = cv.imread(os.path.join(os.getcwd(), 'original', '1.jpg'), cv.IMREAD_GRAYSCALE)
# Canny edge detection with hysteresis thresholds 100 and 200.
edges = cv.Canny(img, 100, 200)

plt.subplot(122), plt.imshow(edges, cmap='gray')
plt.title('Edge Image'), plt.xticks([]), plt.yticks([])
plt.show()
```
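Windows paths such as the ones in `secondMethod.py` are safer to build with `pathlib` or `os.path.join` than with backslashes in ordinary string literals, because Python interprets sequences like `\1` as escapes. A standard-library sketch (the `C:\pixera` root is a placeholder, not the author's directory):

```python
from pathlib import PureWindowsPath

# In an ordinary string literal "\1" is an octal escape, i.e. the control
# character U+0001, not a backslash followed by the digit 1.
print(len("\1"), "\1" == "\x01")  # 1 True

# Joining components avoids hand-written separators entirely.
base = PureWindowsPath(r"C:\pixera")  # placeholder project root
path = base / "original" / "1.jpg"
print(path)  # C:\pixera\original\1.jpg
```

`PureWindowsPath` is used so the sketch behaves the same on any OS; on the author's machine a plain `pathlib.Path` would serve the same purpose.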

methods/white_box_cartoonizer/__pycache__/cartoonize.cpython-37.pyc (ADDED): binary file, 4.2 kB

methods/white_box_cartoonizer/__pycache__/cartoonize.cpython-38.pyc (ADDED): binary file, 3.18 kB

methods/white_box_cartoonizer/__pycache__/guided_filter.cpython-37.pyc (ADDED): binary file, 2.52 kB

methods/white_box_cartoonizer/__pycache__/network.cpython-37.pyc (ADDED): binary file, 1.9 kB

methods/white_box_cartoonizer/cartoonize.py (ADDED, @@ -0,0 +1,83 @@)

```python
"""
Internal code snippets were obtained from https://github.com/SystemErrorWang/White-box-Cartoonization/

Changes needed for TensorFlow 2.x compatibility were obtained from https://github.com/steubk/White-box-Cartoonization
"""
import os
import uuid
import time
import subprocess
import sys

import cv2
import numpy as np

try:
    import tensorflow.compat.v1 as tf
except ImportError:
    import tensorflow as tf

from methods.white_box_cartoonizer.components.guided_filter import gf
from methods.white_box_cartoonizer.components.network import nk

weights_dir = f'{os.getcwd()}/methods/white_box_cartoonizer/saved_models'
# Use the GPU unless the script is started with --cpu.
gpu = len(sys.argv) < 2 or sys.argv[1] != '--cpu'

class WB_Cartoonize:
    def __init__(self):
        if not os.path.exists(weights_dir):
            raise FileNotFoundError("Weights Directory not found, check path")

    def resize_crop(self, image):
        # Scale so the shorter side is at most 720 px, then crop both sides to a multiple of 8.
        h, w, c = np.shape(image)
        if min(h, w) > 720:
            if h > w:
                h, w = int(720*h/w), 720
            else:
                h, w = 720, int(720*w/h)
        image = cv2.resize(image, (w, h),
                           interpolation=cv2.INTER_AREA)
        h, w = (h//8)*8, (w//8)*8
        image = image[:h, :w, :]
        return image

    def load_model(self, weights_dir, gpu):
        try:
            tf.disable_eager_execution()
        except Exception:
            # Not available (or not needed) on some TensorFlow versions.
            pass

        tf.reset_default_graph()

        self.input_photo = tf.placeholder(tf.float32, [1, None, None, 3], name='input_image')
        network_out = nk.unet_generator(self.input_photo)
        self.final_out = gf.guided_filter(self.input_photo, network_out, r=1, eps=5e-3)

        # Restore only the generator's variables from the checkpoint.
        all_vars = tf.trainable_variables()
        gene_vars = [var for var in all_vars if 'generator' in var.name]
        saver = tf.train.Saver(var_list=gene_vars)

        if gpu:
            gpu_options = tf.GPUOptions(allow_growth=True)
            device_count = {'GPU': 1}
        else:
            gpu_options = None
            device_count = {'GPU': 0}

        config = tf.ConfigProto(gpu_options=gpu_options, device_count=device_count)

        self.sess = tf.Session(config=config)

        self.sess.run(tf.global_variables_initializer())
        saver.restore(self.sess, tf.train.latest_checkpoint(weights_dir))

    def infer(self, image):
        self.input_photo = image
        # The graph is rebuilt and the weights are restored on every call.
        self.load_model(weights_dir, gpu)
        image = self.resize_crop(image)
        # Scale pixels from [0, 255] to [-1, 1] and add a batch dimension.
        batch_image = image.astype(np.float32)/127.5 - 1
        batch_image = np.expand_dims(batch_image, axis=0)
        output = self.sess.run(self.final_out, feed_dict={self.input_photo: batch_image})
        # Map the network output back to [0, 255] uint8.
        output = (np.squeeze(output)+1)*127.5
        output = np.clip(output, 0, 255).astype(np.uint8)
        return output
```
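The size handling in `resize_crop` and the value scaling in `infer` are plain arithmetic, so they can be checked without TensorFlow. The sketch below mirrors that arithmetic only (no pixels are touched); the function names are hypothetical, and the reason given for the multiple-of-8 crop is an assumption, since the source does not state it.

```python
def resize_crop_shape(h: int, w: int, max_short_side: int = 720, multiple: int = 8):
    """Return the (height, width) that WB_Cartoonize.resize_crop produces for an h x w image."""
    if min(h, w) > max_short_side:
        if h > w:
            h, w = int(max_short_side * h / w), max_short_side
        else:
            h, w = max_short_side, int(max_short_side * w / h)
    # Crop (not resize) down to a multiple of 8; presumably this keeps the
    # generator's downsampling and upsampling stages aligned.
    return (h // multiple) * multiple, (w // multiple) * multiple


def to_model_range(pixel: float) -> float:
    """[0, 255] -> [-1, 1], as in infer(): x / 127.5 - 1."""
    return pixel / 127.5 - 1


def from_model_range(value: float) -> float:
    """[-1, 1] -> [0, 255], as in infer(): (y + 1) * 127.5."""
    return (value + 1) * 127.5


print(resize_crop_shape(1080, 1920))           # (720, 1280)
print(resize_crop_shape(1920, 1080))           # (1280, 720)
print(resize_crop_shape(500, 333))             # (496, 328)
print(to_model_range(0), to_model_range(255))  # -1.0 1.0
```

Images whose shorter side is already 720 px or less are only cropped, which is why the 500 x 333 example loses just a few border pixels.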

methods/white_box_cartoonizer/components/__pycache__/guided_filter.cpython-38.pyc (ADDED): binary file, 2.18 kB

methods/white_box_cartoonizer/components/__pycache__/network.cpython-38.pyc (ADDED): binary file, 2.16 kB

methods/white_box_cartoonizer/components/guided_filter.py (ADDED, @@ -0,0 +1,73 @@)

```python
"""
Code copyrights are with: https://github.com/SystemErrorWang/White-box-Cartoonization/

Changes to adapt the code to TensorFlow v2 were obtained from: https://github.com/steubk/White-box-Cartoonization
"""
try:
    import tensorflow.compat.v1 as tf
except ImportError:
    import tensorflow as tf

import numpy as np

class gf:
    @staticmethod
    def tf_box_filter(x, r):
        # Mean over a (2r+1) x (2r+1) window, applied per channel.
        k_size = int(2*r+1)
        ch = x.get_shape().as_list()[-1]
        weight = 1/(k_size**2)
        box_kernel = weight*np.ones((k_size, k_size, ch, 1))
        box_kernel = np.array(box_kernel).astype(np.float32)
        output = tf.nn.depthwise_conv2d(x, box_kernel, [1, 1, 1, 1], 'SAME')
        return output

    @staticmethod
    def guided_filter(x, y, r, eps=1e-2):

        x_shape = tf.shape(x)
        #y_shape = tf.shape(y)

        N = gf.tf_box_filter(tf.ones((1, x_shape[1], x_shape[2], 1), dtype=x.dtype), r)

        mean_x = gf.tf_box_filter(x, r) / N
        mean_y = gf.tf_box_filter(y, r) / N
        cov_xy = gf.tf_box_filter(x * y, r) / N - mean_x * mean_y
        var_x = gf.tf_box_filter(x * x, r) / N - mean_x * mean_x

        A = cov_xy / (var_x + eps)
        b = mean_y - A * mean_x

        mean_A = gf.tf_box_filter(A, r) / N
        mean_b = gf.tf_box_filter(b, r) / N

        output = tf.add(mean_A * x, mean_b, name='final_add')

        return output

    @staticmethod
    def fast_guided_filter(lr_x, lr_y, hr_x, r=1, eps=1e-8):

        #assert lr_x.shape.ndims == 4 and lr_y.shape.ndims == 4 and hr_x.shape.ndims == 4

        lr_x_shape = tf.shape(lr_x)
        #lr_y_shape = tf.shape(lr_y)
        hr_x_shape = tf.shape(hr_x)

        N = gf.tf_box_filter(tf.ones((1, lr_x_shape[1], lr_x_shape[2], 1), dtype=lr_x.dtype), r)

        mean_x = gf.tf_box_filter(lr_x, r) / N
        mean_y = gf.tf_box_filter(lr_y, r) / N
        cov_xy = gf.tf_box_filter(lr_x * lr_y, r) / N - mean_x * mean_y
        var_x = gf.tf_box_filter(lr_x * lr_x, r) / N - mean_x * mean_x

        A = cov_xy / (var_x + eps)
        b = mean_y - A * mean_x

        # Upsample the low-resolution coefficients to the high-resolution size.
        mean_A = tf.image.resize_images(A, hr_x_shape[1: 3])
        mean_b = tf.image.resize_images(b, hr_x_shape[1: 3])

        output = mean_A * hr_x + mean_b

        return output
```
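`gf.guided_filter` fits a local linear model `y ≈ A * x + b` in every window, averages the coefficients of all windows covering a pixel, and outputs `mean_A * x + mean_b`; dividing by `N` corrects the zero padding of the `'SAME'` box filter at the borders. A single-channel NumPy sketch of the same arithmetic can help when checking intermediate values. It uses an integral image instead of `tf.nn.depthwise_conv2d`, which is a choice of this sketch, not of the repository.

```python
import numpy as np

def box_sum(x: np.ndarray, r: int) -> np.ndarray:
    """Sum over a (2r+1) x (2r+1) window with zero padding, via an integral image."""
    k = 2 * r + 1
    padded = np.pad(x, r, mode="constant")
    integral = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)), mode="constant")
    return (integral[k:, k:] - integral[:-k, k:]
            - integral[k:, :-k] + integral[:-k, :-k])

def guided_filter(x: np.ndarray, y: np.ndarray, r: int, eps: float = 1e-2) -> np.ndarray:
    """Single-channel guided filter mirroring the arithmetic of gf.guided_filter."""
    # N counts the in-bounds pixels of each window, so border means are not biased by padding.
    N = box_sum(np.ones_like(x), r)
    mean_x = box_sum(x, r) / N
    mean_y = box_sum(y, r) / N
    cov_xy = box_sum(x * y, r) / N - mean_x * mean_y
    var_x = box_sum(x * x, r) / N - mean_x * mean_x
    # Per-window linear model y ~ A * x + b.
    A = cov_xy / (var_x + eps)
    b = mean_y - A * mean_x
    # Average the coefficients of all windows covering each pixel, then apply them.
    mean_A = box_sum(A, r) / N
    mean_b = box_sum(b, r) / N
    return mean_A * x + mean_b

print(box_sum(np.ones((3, 3)), 1).tolist())
# [[4.0, 6.0, 4.0], [6.0, 9.0, 6.0], [4.0, 6.0, 4.0]]
```

A larger `eps` pushes `A` towards 0 in low-variance windows, so flat regions of the guide are smoothed while strong edges, where `var_x` dominates `eps`, are preserved; the cartoonizer uses `r=1, eps=5e-3`.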

methods/white_box_cartoonizer/components/network.py (ADDED, @@ -0,0 +1,72 @@)

```python
"""
Code copyrights are with: https://github.com/SystemErrorWang/White-box-Cartoonization/

Changes to adapt the code to TensorFlow v2 were obtained from: https://github.com/steubk/White-box-Cartoonization
"""
try:
    import tensorflow.compat.v1 as tf
    import tf_slim as slim
except ImportError:
    import tensorflow as tf
    import tensorflow.contrib.slim as slim

import numpy as np


class nk:
    @staticmethod
    def resblock(inputs, out_channel=32, name='resblock'):

        with tf.variable_scope(name):

            x = slim.convolution2d(inputs, out_channel, [3, 3],
                                   activation_fn=None, scope='conv1')
            x = tf.nn.leaky_relu(x)
            x = slim.convolution2d(x, out_channel, [3, 3],
                                   activation_fn=None, scope='conv2')

            return x + inputs

    @staticmethod
    def unet_generator(inputs, channel=32, num_blocks=4, name='generator', reuse=False):
        with tf.variable_scope(name, reuse=reuse):

            # Encoder: full resolution, then two stride-2 downsampling stages.
            x0 = slim.convolution2d(inputs, channel, [7, 7], activation_fn=None)
            x0 = tf.nn.leaky_relu(x0)

            x1 = slim.convolution2d(x0, channel, [3, 3], stride=2, activation_fn=None)
            x1 = tf.nn.leaky_relu(x1)
            x1 = slim.convolution2d(x1, channel*2, [3, 3], activation_fn=None)
            x1 = tf.nn.leaky_relu(x1)

            x2 = slim.convolution2d(x1, channel*2, [3, 3], stride=2, activation_fn=None)
            x2 = tf.nn.leaky_relu(x2)
            x2 = slim.convolution2d(x2, channel*4, [3, 3], activation_fn=None)
            x2 = tf.nn.leaky_relu(x2)

            for idx in range(num_blocks):
                x2 = nk.resblock(x2, out_channel=channel*4, name='block_{}'.format(idx))

            x2 = slim.convolution2d(x2, channel*2, [3, 3], activation_fn=None)
            x2 = tf.nn.leaky_relu(x2)

            # Decoder: bilinear x2 upsampling with additive skip connections.
            h1, w1 = tf.shape(x2)[1], tf.shape(x2)[2]
            x3 = tf.image.resize_bilinear(x2, (h1*2, w1*2))
            x3 = slim.convolution2d(x3+x1, channel*2, [3, 3], activation_fn=None)
            x3 = tf.nn.leaky_relu(x3)
            x3 = slim.convolution2d(x3, channel, [3, 3], activation_fn=None)
            x3 = tf.nn.leaky_relu(x3)

            h2, w2 = tf.shape(x3)[1], tf.shape(x3)[2]
            x4 = tf.image.resize_bilinear(x3, (h2*2, w2*2))
            x4 = slim.convolution2d(x4+x0, channel, [3, 3], activation_fn=None)
            x4 = tf.nn.leaky_relu(x4)
            x4 = slim.convolution2d(x4, 3, [7, 7], activation_fn=None)

            return x4

if __name__ == '__main__':
    pass
```
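The skip connections `x3 + x1` and `x4 + x0` in `unet_generator` only work when the upsampled tensors have exactly the same height and width as the encoder tensors. Assuming `'SAME'` padding (the default of slim's `convolution2d`), a stride-2 convolution maps a size `n` to `ceil(n / 2)`, so any height and width divisible by 4 keeps the shapes aligned, which `resize_crop`'s multiple-of-8 crop guarantees. A standard-library sketch that traces the spatial sizes (`unet_spatial_shapes` is a hypothetical helper):

```python
import math

def unet_spatial_shapes(h: int, w: int) -> dict:
    """Trace spatial sizes through nk.unet_generator, assuming 'SAME' padding."""
    x0 = (h, w)                                        # 7x7 conv, stride 1
    x1 = (math.ceil(x0[0] / 2), math.ceil(x0[1] / 2))  # 3x3 conv, stride 2
    x2 = (math.ceil(x1[0] / 2), math.ceil(x1[1] / 2))  # 3x3 conv, stride 2 (+ resblocks)
    x3 = (x2[0] * 2, x2[1] * 2)                        # resize_bilinear x2, then x3 + x1
    x4 = (x3[0] * 2, x3[1] * 2)                        # resize_bilinear x2, then x4 + x0
    return {
        "x0": x0, "x1": x1, "x2": x2, "x3": x3, "x4": x4,
        # The additive skips need identical spatial sizes on both sides.
        "skips_match": x3 == x1 and x4 == x0,
    }

print(unet_spatial_shapes(720, 1280)["skips_match"])  # True
print(unet_spatial_shapes(722, 1280)["skips_match"])  # False
```

For a height of 722 the encoder produces 361 and then 181 rows, and upsampling 181 gives 362, which no longer matches the 361 rows of `x1`.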

methods/white_box_cartoonizer/saved_models/checkpoint (ADDED, @@ -0,0 +1,3 @@)

```text
model_checkpoint_path: "model-33999"
all_model_checkpoint_paths: "model-33999"
all_model_checkpoint_paths: "model-37499"
```
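`load_model` restores weights with `tf.train.latest_checkpoint(weights_dir)`, which reads the `model_checkpoint_path` entry of this `checkpoint` file (TensorFlow also checks that the referenced checkpoint files exist); `all_model_checkpoint_paths` merely lists other known checkpoints. The simplified standard-library parser below, written for illustration rather than being TensorFlow's own protobuf text parser, shows that `model-33999` is selected even though `model-37499` is also listed:

```python
def parse_checkpoint_state(text: str) -> dict:
    """Parse the key: "value" lines of a TensorFlow `checkpoint` text file (simplified)."""
    state = {"model_checkpoint_path": None, "all_model_checkpoint_paths": []}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, _, value = line.partition(":")
        key, value = key.strip(), value.strip().strip('"')
        if key == "all_model_checkpoint_paths":
            state[key].append(value)
        elif key == "model_checkpoint_path":
            state[key] = value
    return state

CHECKPOINT = '''model_checkpoint_path: "model-33999"
all_model_checkpoint_paths: "model-33999"
all_model_checkpoint_paths: "model-37499"
'''

state = parse_checkpoint_state(CHECKPOINT)
print(state["model_checkpoint_path"])       # model-33999
print(state["all_model_checkpoint_paths"])  # ['model-33999', 'model-37499']
```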

methods/white_box_cartoonizer/saved_models/model-33999.index (ADDED): binary file, 1.56 kB

methods/white_box_cartoonizer/test.jpg (ADDED)

output/result_0.png (ADDED)

output/result_mask_0.png (ADDED)

requirements.txt (ADDED, @@ -0,0 +1,15 @@)

```text
pip
tensorflow
Flask
gunicorn
Pillow
opencv_python
google-cloud-storage
algorithmia
scikit-video
tf_slim
PyYaml
flask-ngrok
paddlepaddle
paddlehub
numpy==1.19.5
```

src/GAN.py (ADDED, @@ -0,0 +1,202 @@)

```python
import os
import cv2
import keras
import warnings
import numpy as np
from PIL import Image
import matplotlib.pyplot as plt

from tensorflow.keras.optimizers import Adam
from tensorflow.keras.models import Sequential, Model
from tensorflow.keras.layers import Dense, LeakyReLU, Reshape, Flatten, Input
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Activation, Dropout, Conv2DTranspose

from tensorflow.compat.v1.keras.layers import BatchNormalization

images = []
def load_images(size=(64, 64)):
    pixed_faces = os.listdir("kaggle/working/results/pixed_faces")
    images_Path = "kaggle/working/results/pixed_faces"
    for i in pixed_faces:
        try:
            image = cv2.imread(f"{images_Path}/{i}")
            image = cv2.resize(image, size)
            images.append(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))
        except Exception:
            # Skip files that cannot be read or resized.
            pass

load_images()


# The methods below take `self`, but the file as committed has no enclosing
# class statement; the class name GAN is an assumption.
class GAN:
    #--------vvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvv
    #Author: https://www.kaggle.com/nassimyagoub
    #--------^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
    def __init__(self):
        self.img_shape = (64, 64, 3)

        self.noise_size = 100

        optimizer = Adam(0.0002, 0.5)

        self.discriminator = self.build_discriminator()
        self.discriminator.compile(loss='binary_crossentropy',
                                   optimizer=optimizer,
                                   metrics=['accuracy'])

        self.generator = self.build_generator()
        self.generator.compile(loss='binary_crossentropy', optimizer=optimizer)

        # Stacked model: noise -> generator -> (frozen) discriminator.
        self.combined = Sequential()
        self.combined.add(self.generator)
        self.combined.add(self.discriminator)

        self.discriminator.trainable = False

        self.combined.compile(loss='binary_crossentropy', optimizer=optimizer)

        self.combined.summary()

    def build_generator(self):
        epsilon = 0.00001
        noise_shape = (self.noise_size,)

        model = Sequential()

        # 100-d noise -> 4x4x512, then four stride-2 transposed convolutions up to 64x64.
        model.add(Dense(4*4*512, activation='linear', input_shape=noise_shape))
        model.add(LeakyReLU(alpha=0.2))
        model.add(Reshape((4, 4, 512)))

        model.add(Conv2DTranspose(512, kernel_size=[4, 4], strides=[2, 2], padding="same",
                                  kernel_initializer=keras.initializers.TruncatedNormal(stddev=0.02)))
        model.add(BatchNormalization(momentum=0.9, epsilon=epsilon))
        model.add(LeakyReLU(alpha=0.2))

        model.add(Conv2DTranspose(256, kernel_size=[4, 4], strides=[2, 2], padding="same",
                                  kernel_initializer=keras.initializers.TruncatedNormal(stddev=0.02)))
        model.add(BatchNormalization(momentum=0.9, epsilon=epsilon))
        model.add(LeakyReLU(alpha=0.2))

        model.add(Conv2DTranspose(128, kernel_size=[4, 4], strides=[2, 2], padding="same",
                                  kernel_initializer=keras.initializers.TruncatedNormal(stddev=0.02)))
        model.add(BatchNormalization(momentum=0.9, epsilon=epsilon))
        model.add(LeakyReLU(alpha=0.2))

        model.add(Conv2DTranspose(64, kernel_size=[4, 4], strides=[2, 2], padding="same",
                                  kernel_initializer=keras.initializers.TruncatedNormal(stddev=0.02)))
        model.add(BatchNormalization(momentum=0.9, epsilon=epsilon))
        model.add(LeakyReLU(alpha=0.2))

        model.add(Conv2DTranspose(3, kernel_size=[4, 4], strides=[1, 1], padding="same",
                                  kernel_initializer=keras.initializers.TruncatedNormal(stddev=0.02)))

        # tanh keeps generated pixels in [-1, 1], matching the normalized training data.
        model.add(Activation("tanh"))

        model.summary()

        noise = Input(shape=noise_shape)
        img = model(noise)

        return Model(noise, img)

    def build_discriminator(self):

        model = Sequential()

        model.add(Conv2D(128, (3, 3), padding='same', input_shape=self.img_shape))
        model.add(LeakyReLU(alpha=0.2))
        model.add(BatchNormalization())
        model.add(Conv2D(128, (3, 3), padding='same'))
        model.add(LeakyReLU(alpha=0.2))
        model.add(BatchNormalization())
        model.add(MaxPooling2D(pool_size=(3, 3)))
        model.add(Dropout(0.2))

        model.add(Conv2D(128, (3, 3), padding='same'))
        model.add(LeakyReLU(alpha=0.2))
        model.add(BatchNormalization())
        model.add(Conv2D(128, (3, 3), padding='same'))
        model.add(LeakyReLU(alpha=0.2))
        model.add(BatchNormalization())
        model.add(MaxPooling2D(pool_size=(3, 3)))
        model.add(Dropout(0.3))

        model.add(Flatten())
        model.add(Dense(128))
        model.add(LeakyReLU(alpha=0.2))
        model.add(Dense(128))
        model.add(LeakyReLU(alpha=0.2))
        model.add(Dense(1, activation='sigmoid'))

        model.summary()

        img = Input(shape=self.img_shape)
        validity = model(img)

        return Model(img, validity)

    def train(self, epochs, batch_size=128, metrics_update=50, save_images=100, save_model=2000):

        # Scale pixels from [0, 255] to [-1, 1].
        X_train = np.array(images)
        X_train = (X_train.astype(np.float32) - 127.5) / 127.5

        half_batch = int(batch_size / 2)

        mean_d_loss = [0, 0]
        mean_g_loss = 0

        for epoch in range(epochs):
            idx = np.random.randint(0, X_train.shape[0], half_batch)
            imgs = X_train[idx]

            noise = np.random.normal(0, 1, (half_batch, self.noise_size))
            gen_imgs = self.generator.predict(noise)

            # Discriminator step: real images labelled 1, generated images labelled 0.
            d_loss = 0.5 * np.add(self.discriminator.train_on_batch(imgs, np.ones((half_batch, 1))),
                                  self.discriminator.train_on_batch(gen_imgs, np.zeros((half_batch, 1))))

            noise = np.random.normal(0, 1, (batch_size, self.noise_size))

            # Generator step: train the stacked model to make the discriminator output 1.
            valid_y = np.array([1] * batch_size)
            g_loss = self.combined.train_on_batch(noise, valid_y)

            mean_d_loss[0] += d_loss[0]
            mean_d_loss[1] += d_loss[1]
            mean_g_loss += g_loss

            if epoch % metrics_update == 0:
                print("%d [Discriminator loss: %f, acc.: %.2f%%] [Generator loss: %f]" % (epoch, mean_d_loss[0]/metrics_update, 100*mean_d_loss[1]/metrics_update, mean_g_loss/metrics_update))
                mean_d_loss = [0, 0]
                mean_g_loss = 0

            if epoch % save_images == 0:
                self.save_images(epoch)

            if epoch % save_model == 0:
                self.generator.save("kaggle/working/results/generators/generator_%d" % epoch)
                self.discriminator.save("kaggle/working/results/discriminators/discriminator_%d" % epoch)

    def save_images(self, epoch):
        noise = np.random.normal(0, 1, (25, self.noise_size))
        gen_imgs = self.generator.predict(noise)

        # Map tanh output from [-1, 1] to [0, 1] for imshow.
        gen_imgs = 0.5 * gen_imgs + 0.5

        fig, axs = plt.subplots(5, 5, figsize=(8, 8))

        for i in range(5):
            for j in range(5):
                axs[i, j].imshow(gen_imgs[5*i+j])
                axs[i, j].axis('off')

        plt.show()

        fig.savefig("kaggle/working/results/pandaS_%d.png" % epoch)
        plt.close()
```
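Each training step in `train` rescales the data to the generator's tanh range with `(x - 127.5) / 127.5`, pairs `half_batch` real images (label 1) with `half_batch` generated ones (label 0) for the discriminator, and `save_images` maps generator output back to [0, 1] with `0.5 * x + 0.5`. The NumPy-only sketch below reproduces that bookkeeping with the Keras models left out; `make_discriminator_batch` is a hypothetical helper, and `np.tanh` of random noise merely stands in for `generator.predict(noise)`.

```python
import numpy as np

def to_tanh_range(images: np.ndarray) -> np.ndarray:
    """uint8 [0, 255] -> float32 [-1, 1], as at the start of train()."""
    return (images.astype(np.float32) - 127.5) / 127.5

def to_display_range(generated: np.ndarray) -> np.ndarray:
    """tanh output [-1, 1] -> [0, 1], as in save_images()."""
    return 0.5 * generated + 0.5

def make_discriminator_batch(x_train, fake_images, rng):
    """Hypothetical helper: sample real images and build the 1/0 labels used by train_on_batch."""
    half_batch = len(fake_images)
    idx = rng.integers(0, x_train.shape[0], half_batch)
    real = x_train[idx]
    real_labels = np.ones((half_batch, 1))
    fake_labels = np.zeros((half_batch, 1))
    return real, real_labels, fake_images, fake_labels

rng = np.random.default_rng(0)
x_train = to_tanh_range(rng.integers(0, 256, size=(10, 64, 64, 3)).astype(np.uint8))
fake = np.tanh(rng.normal(size=(4, 64, 64, 3)))  # stand-in for generator.predict(noise)
real, y_real, fake, y_fake = make_discriminator_batch(x_train, fake, rng)

print(real.shape, y_real.sum(), y_fake.sum())  # (4, 64, 64, 3) 4.0 0.0
```

Because both the training data and the generator output live in [-1, 1], the discriminator sees real and generated images on the same scale.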