Runtime error

init repo

- README.md +3 -3

- app.py +58 -0

- constants.py +25 -0

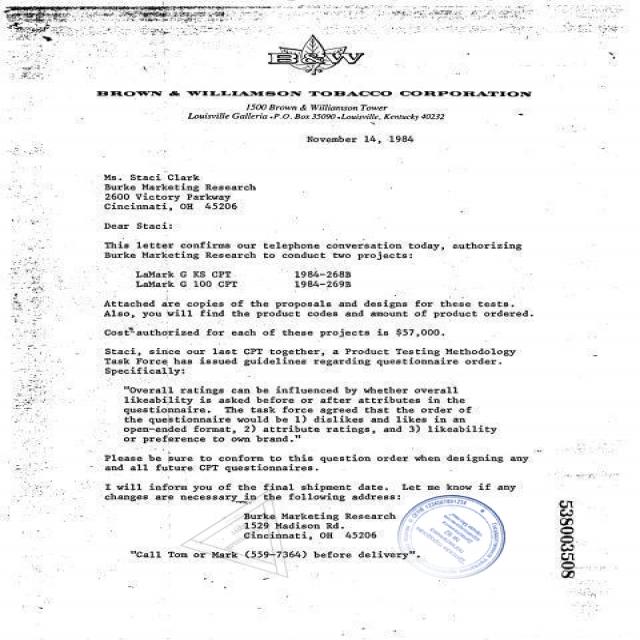

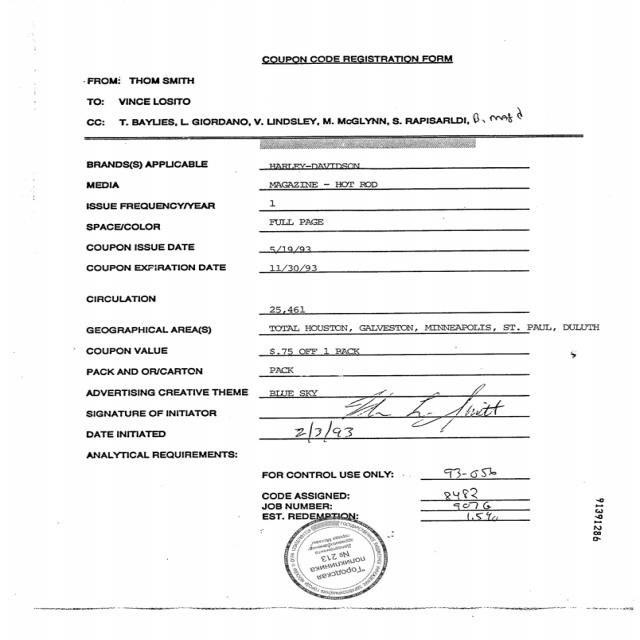

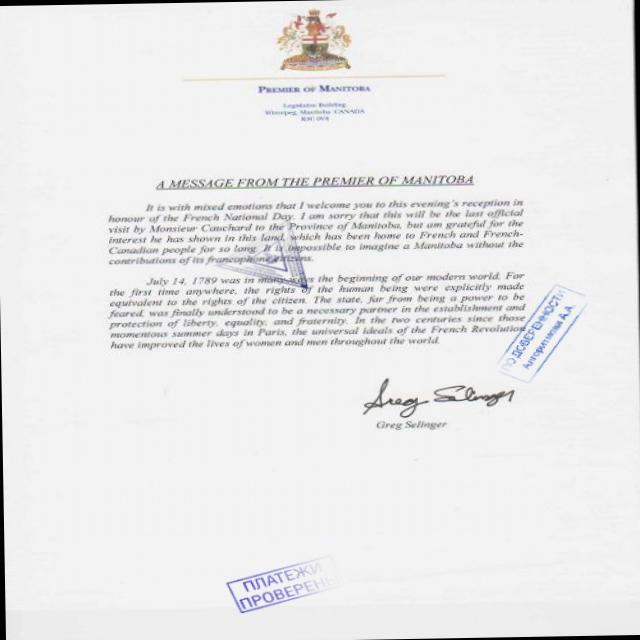

- examples/1.jpg +0 -0

- examples/2.jpg +0 -0

- examples/3.jpg +0 -0

- model.pth +3 -0

- model.py +80 -0

- requirements.txt +4 -0

- utils.py +259 -0

README.md

CHANGED

```diff
@@ -1,8 +1,8 @@
 ---
 title: Stamp Detection
-emoji:
-colorFrom:
-colorTo:
+emoji: 📜
+colorFrom: yellow
+colorTo: gray
 sdk: gradio
 sdk_version: 3.35.2
 app_file: app.py
```

app.py

ADDED

@@ -0,0 +1,58 @@

```python
# AUTOGENERATED! DO NOT EDIT! File to edit: ../app.ipynb.

# %% auto 0
__all__ = ['device', 'model', 'transforms', 'image', 'result', 'examples', 'intf', 'detect_stamps']

# %% ../app.ipynb 1
from model import YOLOStamp
from utils import *  # also provides np, cv2, STD and MEAN used below
import torch
import gradio as gr
import albumentations as A
from albumentations.pytorch.transforms import ToTensorV2
from PIL import Image

# %% ../app.ipynb 2
device = "cuda" if torch.cuda.is_available() else "cpu"

model = YOLOStamp()
model.load_state_dict(torch.load('model.pth', map_location=torch.device('cpu')))
model = model.to(device)
model.eval()

# %% ../app.ipynb 3
transforms = A.Compose([
    A.Resize(height=448, width=448),
    A.Normalize(),
    ToTensorV2(p=1.0),
])

# %% ../app.ipynb 7
def detect_stamps(image):
    shape = image.size[:2]  # PIL gives (width, height), the order cv2.resize expects for dsize
    image = image.convert('RGB')
    image = np.array(image)
    image = transforms(image=image)['image']

    output = model(image.unsqueeze(0).to(device))[0]
    boxes = output_tensor_to_boxes(output.detach().cpu())
    boxes = nonmax_suppression(boxes)
    img = image.permute(1, 2, 0).cpu().numpy()
    img = visualize_bbox(img.copy(), boxes=boxes, draw_center=False)


    img = cv2.resize(img, dsize=shape)
    # undo A.Normalize(); clip so that out-of-range values do not wrap around in the uint8 cast
    return Image.fromarray(np.clip(255. * (img * np.array(STD) + np.array(MEAN)), 0, 255).astype(np.uint8))

# %% ../app.ipynb 9
image = gr.Image(type="pil")  # gr.inputs.Image / gr.outputs.Image are deprecated in Gradio 3.x and removed in 4.0
result = gr.Image(type="pil")
examples = ['./examples/1.jpg', './examples/2.jpg', './examples/3.jpg']

intf = gr.Interface(fn=detect_stamps,
                    inputs=image,
                    outputs=result,
                    title='Stamp detection',
                    examples=examples)
intf.launch(inline=False)
```
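
`detect_stamps` draws the boxes on the 448 × 448 network input and then stretches the annotated image back to the original size with `cv2.resize`. When box coordinates in the original image are needed instead (for example to crop the stamps), they can be rescaled directly. A minimal sketch, assuming boxes in the `[x, y, w, h, prob]` layout returned by `output_tensor_to_boxes` and `W = H = 448` from `constants.py`; `scale_boxes` is a hypothetical helper, not part of this Space.

```python
from typing import List, Sequence, Tuple


def scale_boxes(boxes: Sequence[Sequence[float]],
                original_size: Tuple[int, int],
                net_size: Tuple[int, int] = (448, 448)) -> List[List[float]]:
    """Map [x, y, w, h, prob] boxes from network-input pixels to original-image pixels.

    Both sizes are (width, height), the order of PIL's Image.size.
    Hypothetical helper for illustration; not part of the repository.
    """
    sx = original_size[0] / net_size[0]  # horizontal scale factor
    sy = original_size[1] / net_size[1]  # vertical scale factor
    return [[float(x) * sx, float(y) * sy, float(w) * sx, float(h) * sy, float(p)]
            for x, y, w, h, p in boxes]


# A box covering the top-left quarter of the 448 x 448 input, mapped onto a 1000 x 800 photo.
print(scale_boxes([[0, 0, 224, 224, 0.9]], original_size=(1000, 800)))
```

`float(...)` also accepts the 0-d tensors that `output_tensor_to_boxes` puts in each box, so the helper could be applied to the output of `nonmax_suppression` as is.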

constants.py

ADDED

@@ -0,0 +1,25 @@

```python
# shape of the input image to YOLO
W, H = 448, 448
# grid size after the last convolutional layer of YOLO
S = 7
# anchor box sizes (width, height) of the YOLO model, in grid-cell units
ANCHORS = [[1.08, 1.19],
           [3.42, 4.41],
           [16.62, 10.52]]
# number of anchor boxes per grid cell
BOX = len(ANCHORS)
# maximum number of stamps per image
STAMP_NB_MAX = 10
# minimal confidence that a stamp is present in a grid cell
OUTPUT_THRESH = 0.76
# maximal IoU at which two boxes are still considered different
IOU_THRESH = 0.3
# path to the folder containing images
IMAGE_FOLDER = './data/images'
# path to the .csv file containing annotations
ANNOTATIONS_PATH = './data/all_annotations.csv'
# standard deviation and mean of pixel values for normalization (ImageNet statistics, the A.Normalize() defaults)
STD = (0.229, 0.224, 0.225)
MEAN = (0.485, 0.456, 0.406)
# box color to show the bounding box on image
BOX_COLOR = (0, 0, 255)
```
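
The anchors are expressed in grid-cell units: `output_tensor_to_boxes` computes `exp(tw) * anchor_w` and then multiplies it by the cell width `W / S` (likewise for heights). With `W = H = 448` and `S = 7` a cell is 64 pixels, so the pixel sizes of the three anchors can be computed as below. `anchors_in_pixels` is a hypothetical helper written for illustration.

```python
# Values copied from constants.py.
W, H = 448, 448
S = 7
ANCHORS = [[1.08, 1.19], [3.42, 4.41], [16.62, 10.52]]

cell_w, cell_h = W / S, H / S  # 64.0 x 64.0 pixels per grid cell


def anchors_in_pixels(anchors, cell_w, cell_h):
    """Convert (width, height) anchors from grid-cell units to pixels (hypothetical helper)."""
    return [(aw * cell_w, ah * cell_h) for aw, ah in anchors]


for (aw, ah), (pw, ph) in zip(ANCHORS, anchors_in_pixels(ANCHORS, cell_w, cell_h)):
    print(f"anchor {aw} x {ah} cells -> {pw:.1f} x {ph:.1f} px")
```

The largest anchor (about 1064 × 673 px) is wider than the 448-pixel input itself; the learned `exp(tw)` factor shrinks it for stamps that fill most of the page.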

examples/1.jpg

ADDED

examples/2.jpg

ADDED

examples/3.jpg

ADDED

model.pth

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:e3e633824bea418bf44bb82f8d5a667b9d7d29b913079c4d8b6ca217f6f383e7
size 502816
```

model.py

ADDED

@@ -0,0 +1,80 @@

```python
import torch
import torch.nn as nn

from constants import *

"""
Symmetric activation that clips every value to [-1, 1]; `inplace` is only reported by extra_repr and does not change forward.
"""
class SymReLU(nn.Module):
    def __init__(self, inplace: bool = False):
        super().__init__()
        self.inplace = inplace

    def forward(self, input):
        return torch.min(torch.max(input, -torch.ones_like(input)), torch.ones_like(input))

    def extra_repr(self) -> str:
        inplace_str = 'inplace=True' if self.inplace else ''
        return inplace_str
```
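
Despite its name, `SymReLU` is not a rectifier: `forward` keeps negative values down to -1 and clips everything to the range [-1, 1], which is what `torch.clamp(input, -1, 1)` and `nn.Hardtanh()` (default bounds -1 and 1) compute. The plain-Python scalar version below illustrates the element-wise behaviour; `sym_relu` is an illustrative name, not code from the repository.

```python
def sym_relu(x: float) -> float:
    """Scalar form of SymReLU.forward: clip x to the range [-1, 1]."""
    return min(max(x, -1.0), 1.0)


print([sym_relu(v) for v in (-3.0, -0.5, 0.0, 0.5, 3.0)])
# [-1.0, -0.5, 0.0, 0.5, 1.0]
```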

```python
"""
Class implementing the YOLO-Stamp architecture described in https://link.springer.com/article/10.1134/S1054661822040046.
"""
class YOLOStamp(nn.Module):
    def __init__(
        self,
        anchors=ANCHORS,
        in_channels=3,
    ):
        super().__init__()

        self.register_buffer('anchors', torch.tensor(anchors))

        self.act = SymReLU()
        self.pool = nn.MaxPool2d(kernel_size=2, stride=2)
        self.conv1 = nn.Conv2d(in_channels=in_channels, out_channels=8, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        self.norm1 = nn.BatchNorm2d(num_features=8)
        self.conv2 = nn.Conv2d(in_channels=8, out_channels=16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        self.norm2 = nn.BatchNorm2d(num_features=16)
        self.conv3 = nn.Conv2d(in_channels=16, out_channels=16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        self.norm3 = nn.BatchNorm2d(num_features=16)
        self.conv4 = nn.Conv2d(in_channels=16, out_channels=16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        self.norm4 = nn.BatchNorm2d(num_features=16)
        self.conv5 = nn.Conv2d(in_channels=16, out_channels=16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        self.norm5 = nn.BatchNorm2d(num_features=16)
        self.conv6 = nn.Conv2d(in_channels=16, out_channels=24, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        self.norm6 = nn.BatchNorm2d(num_features=24)
        self.conv7 = nn.Conv2d(in_channels=24, out_channels=24, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        self.norm7 = nn.BatchNorm2d(num_features=24)
        self.conv8 = nn.Conv2d(in_channels=24, out_channels=48, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        self.norm8 = nn.BatchNorm2d(num_features=48)
        self.conv9 = nn.Conv2d(in_channels=48, out_channels=48, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        self.norm9 = nn.BatchNorm2d(num_features=48)
        self.conv10 = nn.Conv2d(in_channels=48, out_channels=48, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        self.norm10 = nn.BatchNorm2d(num_features=48)
        self.conv11 = nn.Conv2d(in_channels=48, out_channels=64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        self.norm11 = nn.BatchNorm2d(num_features=64)
        self.conv12 = nn.Conv2d(in_channels=64, out_channels=256, kernel_size=(1, 1), stride=(1, 1), padding=(0, 0))
        self.norm12 = nn.BatchNorm2d(num_features=256)
        self.conv13 = nn.Conv2d(in_channels=256, out_channels=len(anchors) * 5, kernel_size=(1, 1), stride=(1, 1), padding=(0, 0))

    def forward(self, x, head=True):
        x = x.type(self.conv1.weight.dtype)
        x = self.act(self.pool(self.norm1(self.conv1(x))))
        x = self.act(self.pool(self.norm2(self.conv2(x))))
        x = self.act(self.pool(self.norm3(self.conv3(x))))
        x = self.act(self.pool(self.norm4(self.conv4(x))))
        x = self.act(self.pool(self.norm5(self.conv5(x))))
        x = self.act(self.norm6(self.conv6(x)))
        x = self.act(self.norm7(self.conv7(x)))
        x = self.act(self.pool(self.norm8(self.conv8(x))))
        x = self.act(self.norm9(self.conv9(x)))
        x = self.act(self.norm10(self.conv10(x)))
        x = self.act(self.norm11(self.conv11(x)))
        x = self.act(self.norm12(self.conv12(x)))
        x = self.conv13(x)
        nb, _, nh, nw = x.shape
        x = x.permute(0, 2, 3, 1).view(nb, nh, nw, self.anchors.shape[0], 5)
        return x
```
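
Only the `MaxPool2d(kernel_size=2, stride=2)` layers change the spatial size: every 3×3 convolution uses padding 1 and the two 1×1 convolutions use padding 0, so each keeps height and width. `forward` pools after `conv1` to `conv5` and after `conv8`, six halvings in total, so a 448 × 448 input ends on the 7 × 7 grid declared as `S` in `constants.py`, and `conv13` emits `len(anchors) * 5 = 15` channels that the final `view` splits into `(BOX, 5)`. The arithmetic can be checked without PyTorch; the pool pattern below is read off `forward`.

```python
# True where YOLOStamp.forward applies self.pool after conv1 ... conv12.
POOL_AFTER = [True, True, True, True, True, False, False, True, False, False, False, False]


def grid_size(side: int, pool_after=POOL_AFTER) -> int:
    """Spatial size after the backbone; MaxPool2d(2, 2) floors odd sizes."""
    for pooled in pool_after:
        if pooled:
            side //= 2
    return side


side = grid_size(448)
n_anchors = 3                                  # len(ANCHORS) in constants.py
output_shape = (1, side, side, n_anchors, 5)   # batch of one image
print(side)          # 7
print(output_shape)  # (1, 7, 7, 3, 5)
```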

requirements.txt

ADDED

@@ -0,0 +1,4 @@

```text
torch
opencv-python
pillow
albumentations
```

utils.py

ADDED

@@ -0,0 +1,259 @@

```python
import torch
import cv2
import pandas as pd
import numpy as np
from pathlib import Path
import matplotlib.pyplot as plt
from constants import *


def output_tensor_to_boxes(boxes_tensor):
    """
    Converts the YOLO output tensor to a list of boxes with probabilities.

    Arguments:
    boxes_tensor -- tensor of shape (S, S, BOX, 5)

    Returns:
    boxes -- list of shape (None, 5); each box is [x, y, w, h, prob] in pixels of the W x H input

    Note: "None" is here because the exact number of selected boxes is not known in advance; it depends on the threshold.
    For example, the actual output size would be (10, 5) if 10 boxes pass the threshold.
    """
    cell_w, cell_h = W/S, H/S
    boxes = []

    for i in range(S):
        for j in range(S):
            for b in range(BOX):
                anchor_wh = torch.tensor(ANCHORS[b])
                data = boxes_tensor[i,j,b]
                xy = torch.sigmoid(data[:2])
                wh = torch.exp(data[2:4])*anchor_wh
                obj_prob = torch.sigmoid(data[4])

                if obj_prob > OUTPUT_THRESH:
                    x_center, y_center, w, h = xy[0], xy[1], wh[0], wh[1]
                    x, y = x_center+j-w/2, y_center+i-h/2
                    x,y,w,h = x*cell_w, y*cell_h, w*cell_w, h*cell_h
                    box = [x,y,w,h, obj_prob]
                    boxes.append(box)
    return boxes
```
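
For every cell and anchor, `output_tensor_to_boxes` applies a sigmoid to the centre offsets and to the objectness score, scales `exp` of the size outputs by the anchor, and converts from grid-cell units to pixels with the cell size `W / S`. The plain-Python sketch below decodes a single cell with the same formulas, which makes the arithmetic easy to check by hand; `decode_cell` is an illustrative name and the raw prediction is made up.

```python
import math

# Values copied from constants.py.
W, H, S = 448, 448, 7
ANCHORS = [[1.08, 1.19], [3.42, 4.41], [16.62, 10.52]]


def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


def decode_cell(raw, i, j, b):
    """Decode one raw (tx, ty, tw, th, tobj) prediction from grid row i, column j, anchor b
    into a pixel box [x, y, w, h, prob], following output_tensor_to_boxes."""
    cell_w, cell_h = W / S, H / S
    tx, ty, tw, th, tobj = raw
    x_center = sigmoid(tx) + j                 # centre in grid-cell units
    y_center = sigmoid(ty) + i
    w = math.exp(tw) * ANCHORS[b][0]           # size in grid-cell units
    h = math.exp(th) * ANCHORS[b][1]
    x, y = x_center - w / 2, y_center - h / 2  # top-left corner
    return [x * cell_w, y * cell_h, w * cell_w, h * cell_h, sigmoid(tobj)]


# Zero offsets: the box is centred in cell (3, 3) and has exactly the first anchor's size.
print(decode_cell((0.0, 0.0, 0.0, 0.0, 2.0), i=3, j=3, b=0))
```

With an objectness logit of 2.0 the score is about 0.88, above `OUTPUT_THRESH = 0.76`, so the repository function would keep this box.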

```python
def plot_img(img, size=(7,7)):
    plt.figure(figsize=size)
    plt.imshow(img)
    plt.show()


def plot_normalized_img(img, std=STD, mean=MEAN, size=(7,7)):
    mean = mean if isinstance(mean, np.ndarray) else np.array(mean)
    std = std if isinstance(std, np.ndarray) else np.array(std)
    plt.figure(figsize=size)
    plt.imshow(np.clip(255. * (img * std + mean), 0, 255).astype(np.uint8))
    plt.show()
```
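
`A.Normalize()` maps each 8-bit channel value `v` to `(v / 255 - mean) / std`, and `plot_normalized_img` (like `detect_stamps` in `app.py`) inverts it with `255 * (x * std + mean)`. The per-pixel sketch below shows both directions in plain Python; the function names are illustrative, and the result is rounded to the nearest integer so that floating-point error cannot turn 200 into 199.

```python
# Constants copied from constants.py (the same defaults A.Normalize() uses).
STD = (0.229, 0.224, 0.225)
MEAN = (0.485, 0.456, 0.406)


def normalize_pixel(rgb):
    """What A.Normalize() does to one 8-bit RGB pixel (max_pixel_value=255)."""
    return tuple((v / 255.0 - m) / s for v, m, s in zip(rgb, MEAN, STD))


def denormalize_pixel(values):
    """Inverse: 255 * (x * std + mean), clipped to [0, 255] and rounded."""
    return tuple(round(min(max(255.0 * (x * s + m), 0.0), 255.0))
                 for x, s, m in zip(values, STD, MEAN))


pixel = (200, 120, 40)
print(normalize_pixel(pixel))
print(denormalize_pixel(normalize_pixel(pixel)))  # (200, 120, 40)
```

Values outside the range produced by normalization, such as the 255 that `visualize_bbox` writes into the blue channel of a normalized image, land far outside [0, 255] after the inverse; clipping keeps them at 255 instead of letting the integer cast wrap around.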

```python
def visualize_bbox(img, boxes, thickness=2, color=BOX_COLOR, draw_center=True):
    """
    Draws boxes on the given image.

    Arguments:
    img -- torch.Tensor of shape (3, H, W) or numpy.ndarray of shape (H, W, 3)
    boxes -- list of shape (None, 5)
    thickness -- number specifying the thickness of box border
    color -- RGB tuple of shape (3,) specifying the color of boxes
    draw_center -- boolean specifying whether to draw center or not

    Returns:
    img_copy -- numpy.ndarray of shape (H, W, 3) containing the image with bounding boxes
    """
    img_copy = img.cpu().permute(1,2,0).numpy().copy() if isinstance(img, torch.Tensor) else img.copy()  # contiguous copy for OpenCV
    for box in boxes:
        x,y,w,h = int(box[0]), int(box[1]), int(box[2]), int(box[3])
        img_copy = cv2.rectangle(
            img_copy,
            (x,y),(x+w, y+h),
            color, thickness)
        if draw_center:
            center = (x+w//2, y+h//2)
            img_copy = cv2.circle(img_copy, center=center, radius=3, color=(0,255,0), thickness=2)
    return img_copy
```

```python
def read_data(annotations=Path(ANNOTATIONS_PATH)):
    """
    Reads annotation data from a .csv file, which must contain the columns image_name, bbox_x, bbox_y, bbox_width and bbox_height.

    Arguments:
    annotations -- string or Path specifying the path of the annotations file

    Returns:
    data -- list of dictionaries containing the file path, the number of boxes and the boxes themselves
    """
    data = []

    boxes = pd.read_csv(annotations)
    image_names = boxes['image_name'].unique()

    for image_name in image_names:
        cur_boxes = boxes[boxes['image_name'] == image_name]
        img_data = {
            'file_path': image_name,
            'box_nb': len(cur_boxes),
            'boxes': []}
        stamp_nb = img_data['box_nb']
        if stamp_nb <= STAMP_NB_MAX:
            img_data['boxes'] = cur_boxes[['bbox_x', 'bbox_y','bbox_width','bbox_height']].values
            data.append(img_data)
    return data
```
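
`read_data` filters the whole annotation table once per image (`boxes[boxes['image_name'] == image_name]`). A single pass that groups rows by `image_name` yields the same per-image grouping; in pandas that would be `DataFrame.groupby('image_name', sort=False)`, which keeps images in order of first appearance like `unique()` does. Below is a dependency-free sketch with the `csv` module; `group_annotations` is a hypothetical name, it returns plain lists rather than NumPy arrays, and dropping images with more than `STAMP_NB_MAX` boxes is an assumption of the sketch.

```python
import csv
import io
from collections import defaultdict

STAMP_NB_MAX = 10  # copied from constants.py


def group_annotations(csv_text: str, max_boxes: int = STAMP_NB_MAX):
    """Group annotation rows by image_name in one pass (hypothetical helper).

    Images with more than max_boxes boxes are skipped (assumption of this sketch).
    """
    per_image = defaultdict(list)  # insertion order = order of first appearance
    for row in csv.DictReader(io.StringIO(csv_text)):
        per_image[row['image_name']].append(
            [float(row[k]) for k in ('bbox_x', 'bbox_y', 'bbox_width', 'bbox_height')])
    return [{'file_path': name, 'box_nb': len(b), 'boxes': b}
            for name, b in per_image.items() if len(b) <= max_boxes]


sample = """image_name,bbox_x,bbox_y,bbox_width,bbox_height
a.jpg,10,20,30,40
a.jpg,50,60,70,80
b.jpg,1,2,3,4
"""
print(group_annotations(sample))
```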

```python
def boxes_to_tensor(boxes):
    """
    Converts a list of [x, y, w, h] pixel boxes to the target tensor format.

    Arguments:
    boxes -- list of boxes

    Returns:
    boxes_tensor -- tensor of shape (S, S, BOX, 5)
    """
    boxes_tensor = torch.zeros((S, S, BOX, 5))
    cell_w, cell_h = W/S, H/S
    for i, box in enumerate(boxes):
        x, y, w, h = box
        # normalize xywh with cell_size
        x, y, w, h = x / cell_w, y / cell_h, w / cell_w, h / cell_h
        center_x, center_y = x + w / 2, y + h / 2
        grid_x = int(np.floor(center_x))
        grid_y = int(np.floor(center_y))

        if grid_x < S and grid_y < S:
            boxes_tensor[grid_y, grid_x, :, 0:4] = torch.tensor(BOX * [[center_x - grid_x, center_y - grid_y, w, h]])
            boxes_tensor[grid_y, grid_x, :, 4] = torch.tensor(BOX * [1.])
    return boxes_tensor


def target_tensor_to_boxes(boxes_tensor, output_threshold=OUTPUT_THRESH):
    """
    Recovers bounding boxes from a target tensor (the tensor output of the dataset).
    Arguments:
    boxes_tensor -- tensor of shape (S, S, BOX, 5)
    Returns:
    boxes -- list of boxes, each box is [x, y, w, h]
    """
    cell_w, cell_h = W/S, H/S
    boxes = []
    for i in range(S):
        for j in range(S):
            for b in range(BOX):
                data = boxes_tensor[i,j,b]
                x_center,y_center, w, h, obj_prob = data[0], data[1], data[2], data[3], data[4]
                if obj_prob > output_threshold:
                    x, y = x_center+j-w/2, y_center+i-h/2
                    x,y,w,h = x*cell_w, y*cell_h, w*cell_w, h*cell_h
                    box = [x,y,w,h]
                    boxes.append(box)
    return boxes
```
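
For a single box, `boxes_to_tensor` and `target_tensor_to_boxes` are inverses: the encoder stores the box centre as an offset inside the cell that contains it, plus the width and height in cell units, and the decoder adds the cell index back and scales to pixels. The plain-Python sketch below reproduces both directions for one box (`encode_box` and `decode_box` are illustrative names); it leaves out the copy across all `BOX` anchors and the objectness flag that the real functions write.

```python
import math

W, H, S = 448, 448, 7  # copied from constants.py


def encode_box(box):
    """Mirror of boxes_to_tensor for one [x, y, w, h] pixel box: returns the grid cell
    (row, col) and the target [dx, dy, w, h], all in grid-cell units."""
    cell_w, cell_h = W / S, H / S
    x, y, w, h = box[0] / cell_w, box[1] / cell_h, box[2] / cell_w, box[3] / cell_h
    center_x, center_y = x + w / 2, y + h / 2
    grid_x, grid_y = int(math.floor(center_x)), int(math.floor(center_y))
    return (grid_y, grid_x), [center_x - grid_x, center_y - grid_y, w, h]


def decode_box(cell, target):
    """Mirror of target_tensor_to_boxes for one cell: back to an [x, y, w, h] pixel box."""
    cell_w, cell_h = W / S, H / S
    (i, j), (dx, dy, w, h) = cell, target
    x, y = dx + j - w / 2, dy + i - h / 2
    return [x * cell_w, y * cell_h, w * cell_w, h * cell_h]


cell, target = encode_box([100.0, 150.0, 80.0, 40.0])
print(cell, target)               # (2, 2) [0.1875, 0.65625, 1.25, 0.625]
print(decode_box(cell, target))   # [100.0, 150.0, 80.0, 40.0]
```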

```python
def overlap(interval_1, interval_2):
    """
    Calculates the length of the overlap between two intervals.

    Arguments:
    interval_1 -- list or tuple of shape (2,) containing endpoints of the first interval
    interval_2 -- list or tuple of shape (2,) containing endpoints of the second interval

    Returns:
    overlap -- length of overlap
    """
    x1, x2 = interval_1
    x3, x4 = interval_2
    if x3 < x1:
        if x4 < x1:
            return 0
        else:
            return min(x2,x4) - x1
    else:
        if x2 < x3:
            return 0
        else:
            return min(x2,x4) - x3
```
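
For well-ordered intervals (`x1 <= x2` and `x3 <= x4`) the four branches of `overlap` reduce to one expression: the smaller right endpoint minus the larger left endpoint, floored at zero. A minimal equivalent, offered as an alternative formulation rather than the repository's code:

```python
def overlap_closed_form(interval_1, interval_2):
    """Length of the overlap of [x1, x2] and [x3, x4]; 0 when they are disjoint."""
    (x1, x2), (x3, x4) = interval_1, interval_2
    return max(0, min(x2, x4) - max(x1, x3))


print(overlap_closed_form((0, 10), (5, 20)))   # 5
print(overlap_closed_form((0, 10), (2, 4)))    # 2
print(overlap_closed_form((0, 10), (12, 20)))  # 0
```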

```python
def compute_iou(box1, box2):
    """
    Computes the IoU (intersection over union) of box1 and box2.

    Arguments:
    box1 -- list of shape (5, ). Represents the first box
    box2 -- list of shape (5, ). Represents the second box
    Each box is [x, y, w, h, prob]

    Returns:
    iou -- intersection over union score between two boxes
    """
    x1,y1,w1,h1 = box1[0], box1[1], box1[2], box1[3]
    x2,y2,w2,h2 = box2[0], box2[1], box2[2], box2[3]

    area1, area2 = w1*h1, w2*h2
    intersect_w = overlap((x1,x1+w1), (x2,x2+w2))
    intersect_h = overlap((y1,y1+h1), (y2,y2+h2))
    if intersect_w == w1 and intersect_h == h1 or intersect_w == w2 and intersect_h == h2:
        return 1.  # one box lies entirely inside the other: treat them as duplicates
    intersect_area = intersect_w*intersect_h
    iou = intersect_area/(area1 + area2 - intersect_area)
    return iou
```
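
A worked IoU example with a tall box and a wide box. Their vertical spans are (0, 100) and (10, 30), so the intersection is 50 × 20 = 1000 and the union is 5000 + 2000 - 1000 = 6000. The sketch below is a plain IoU without the containment shortcut of `compute_iou`; `overlap_1d` and `iou_xywh` are illustrative names.

```python
def overlap_1d(a, b):
    """Length of the overlap of intervals a = (a0, a1) and b = (b0, b1)."""
    return max(0, min(a[1], b[1]) - max(a[0], b[0]))


def iou_xywh(box1, box2):
    """Plain IoU of two [x, y, w, h, ...] boxes (top-left corner plus size)."""
    x1, y1, w1, h1 = box1[:4]
    x2, y2, w2, h2 = box2[:4]
    inter = overlap_1d((x1, x1 + w1), (x2, x2 + w2)) * overlap_1d((y1, y1 + h1), (y2, y2 + h2))
    return inter / (w1 * h1 + w2 * h2 - inter)


a = [0, 0, 50, 100, 0.9]   # tall box
b = [0, 10, 100, 20, 0.8]  # wide, flat box
print(iou_xywh(a, b))      # 1000 / 6000 = 1/6
```

Boxes with very different aspect ratios are where the height term matters: computing the vertical span of `b` as `y2 + w2` would give an intersection height of 90 and a ratio of 4500 / 2500 = 1.8, an impossible IoU.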

```python
def nonmax_suppression(boxes, iou_thresh = IOU_THRESH):
    """
    Removes overlapping bboxes (greedy non-maximum suppression).

    Arguments:
    boxes -- list of shape (None, 5)
    iou_thresh -- maximal IoU value at which boxes are still considered different
    Each box is [x, y, w, h, prob]

    Returns:
    boxes -- list of shape (None, 5) with overlapping boxes removed
    """
    boxes = sorted(boxes, key=lambda x: x[4], reverse=True)
    for i, current_box in enumerate(boxes):
        if current_box[4] <= 0:
            continue
        for j in range(i+1, len(boxes)):
            iou = compute_iou(current_box, boxes[j])
            if iou > iou_thresh:
                boxes[j][4] = 0  # suppress (modifies the caller's box lists in place)
    boxes = [box for box in boxes if box[4] > 0]
    return boxes
```
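
`nonmax_suppression` is greedy NMS: boxes are visited in order of decreasing confidence, and each surviving box suppresses every lower-ranked box whose IoU with it exceeds `iou_thresh` (`IOU_THRESH = 0.3` in `constants.py`). The sketch below runs the same greedy procedure but builds new lists instead of zeroing scores in place. `greedy_nms` and `iou_xywh` are illustrative names, and `iou_xywh` is a plain IoU without the containment shortcut of `compute_iou`, so results can differ when one box lies inside another.

```python
def iou_xywh(box1, box2):
    """Plain IoU of two [x, y, w, h, ...] boxes (top-left corner plus size)."""
    x1, y1, w1, h1 = box1[:4]
    x2, y2, w2, h2 = box2[:4]
    inter_w = max(0, min(x1 + w1, x2 + w2) - max(x1, x2))
    inter_h = max(0, min(y1 + h1, y2 + h2) - max(y1, y2))
    inter = inter_w * inter_h
    return inter / (w1 * h1 + w2 * h2 - inter)


def greedy_nms(boxes, iou_thresh=0.3):
    """Keep the highest-scoring box, drop the remaining boxes whose IoU with it exceeds
    iou_thresh, and repeat. The input lists are not modified."""
    remaining = sorted(boxes, key=lambda b: b[4], reverse=True)
    kept = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        remaining = [b for b in remaining if iou_xywh(best, b) <= iou_thresh]
    return kept


boxes = [
    [0, 0, 100, 100, 0.95],
    [10, 10, 100, 100, 0.90],   # IoU 8100 / 11900 with the first box -> suppressed
    [300, 300, 50, 50, 0.80],   # no overlap -> kept
]
print(greedy_nms(boxes))  # [[0, 0, 100, 100, 0.95], [300, 300, 50, 50, 0.8]]
```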

```python
def yolo_head(yolo_output):
    """
    Converts a YOLO output tensor to separate tensors of coordinates, shapes and probabilities.

    Arguments:
    yolo_output -- tensor of shape (batch_size, S, S, BOX, 5)

    Returns:
    xy -- tensor of shape (batch_size, S, S, BOX, 2) containing coordinates of centers of found boxes for each anchor in each grid cell
    wh -- tensor of shape (batch_size, S, S, BOX, 2) containing width and height of found boxes for each anchor in each grid cell
    prob -- tensor of shape (batch_size, S, S, BOX, 1) containing the probability of presence of boxes for each anchor in each grid cell
    """
    xy = torch.sigmoid(yolo_output[..., 0:2])
    anchors_wh = torch.tensor(ANCHORS, device=yolo_output.device).view(1, 1, 1, len(ANCHORS), 2)
    wh = torch.exp(yolo_output[..., 2:4]) * anchors_wh
    prob = torch.sigmoid(yolo_output[..., 4:5])
    return xy, wh, prob

def process_target(target):
    xy = target[..., 0:2]
    wh = target[..., 2:4]
    prob = target[..., 4:5]
    return xy, wh, prob
```