FORK of FLAN-T5 XXL

This is a fork of google/flan-t5-xxl implementing a custom

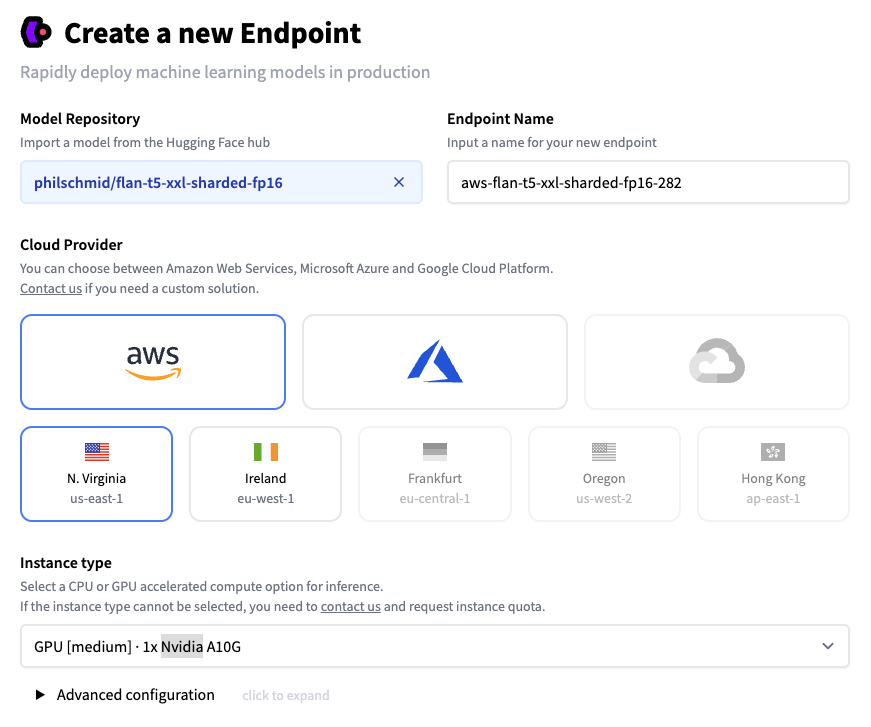

handler.pyas an example for how to use t5-11b with inference-endpoints on a single NVIDIA A10G.
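For readers who have not written a custom handler before, here is a minimal sketch of what such a handler.py can look like. It is not the handler shipped in this repository. It assumes the Inference Endpoints convention of a class named `EndpointHandler` with `__init__(path)` and `__call__(data)`, and it assumes that "quantized" means 8-bit loading through `bitsandbytes` (which also requires `accelerate`). The helper `split_payload` is a hypothetical name introduced only for this example.

```python
from typing import Any, Dict, List, Tuple


def split_payload(data: Dict[str, Any]) -> Tuple[Any, Dict[str, Any]]:
    """Separate the prompt from optional generation parameters (hypothetical helper)."""
    inputs = data.get("inputs", data)
    parameters = data.get("parameters") or {}
    return inputs, parameters


class EndpointHandler:
    def __init__(self, path: str = ""):
        # Imported here so this module can be imported on a machine
        # that does not have the GPU stack installed.
        from transformers import (
            AutoModelForSeq2SeqLM,
            AutoTokenizer,
            BitsAndBytesConfig,
        )

        self.tokenizer = AutoTokenizer.from_pretrained(path)
        # Assumption: 8-bit weights via bitsandbytes; device_map="auto"
        # lets accelerate place the layers on the available GPU.
        self.model = AutoModelForSeq2SeqLM.from_pretrained(
            path,
            device_map="auto",
            quantization_config=BitsAndBytesConfig(load_in_8bit=True),
        )

    def __call__(self, data: Dict[str, Any]) -> List[Dict[str, str]]:
        # Assumption: "inputs" is a single string, so no padding is needed.
        inputs, parameters = split_payload(data)
        input_ids = self.tokenizer(inputs, return_tensors="pt").input_ids
        input_ids = input_ids.to(self.model.device)
        output_ids = self.model.generate(input_ids, **parameters)
        text = self.tokenizer.decode(output_ids[0], skip_special_tokens=True)
        return [{"generated_text": text}]
```

With a handler of this shape, a request body such as `{"inputs": "Translate to German: Hello", "parameters": {"max_new_tokens": 32}}` would be split into the prompt and the keyword arguments passed to `generate`.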

You can deploy flan-t5-xxl with one click. Since we are using the "quantized" version, we can switch our instance type to "GPU [medium] · 1x Nvidia A10G".
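A rough back-of-the-envelope calculation shows why the quantized version matters for this instance type. The sketch below counts weight memory only, ignoring activations and framework overhead. It assumes about 11 billion parameters (from the name t5-11b), that "quantized" means one byte per parameter, and that the A10G has 24 GB of GPU memory.

```python
NUM_PARAMS = 11e9          # assumption: roughly 11B parameters, per "t5-11b"
A10G_MEMORY_GB = 24        # assumption: memory of a single A10G
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


# Weight footprint for each precision.
footprint = {
    dtype: weight_memory_gb(NUM_PARAMS, nbytes)
    for dtype, nbytes in BYTES_PER_PARAM.items()
}

for dtype, gigabytes in footprint.items():
    headroom = A10G_MEMORY_GB - gigabytes
    print(f"{dtype}: {gigabytes:.0f} GB weights, {headroom:.0f} GB headroom")
```

Under these assumptions, float32 weights do not fit at all, float16 weights leave almost no room for activations, and int8 weights leave roughly half of the card free for generation.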

TL;DR

If you already know T5, FLAN-T5 is just better at everything. For the same number of parameters, these models have been fine-tuned on more than 1000 additional tasks, also covering more languages. As mentioned in the first few lines of the abstract:

Flan-PaLM 540B achieves state-of-the-art performance on several benchmarks, such as 75.2% on five-shot MMLU. We also publicly release Flan-T5 checkpoints, which achieve strong few-shot performance even compared to much larger models, such as PaLM 62B. Overall, instruction finetuning is a general method for improving the performance and usability of pretrained language models.

Disclaimer: Content from this model card has been written by the Hugging Face team, and parts of it were copied and pasted from the T5 model card.

Model Details

Model Description

- Model type: Language model

- Language(s) (NLP): English, Spanish, Japanese, Persian, Hindi, French, Chinese, Bengali, Gujarati, German, Telugu, Italian, Arabic, Polish, Tamil, Marathi, Malayalam, Oriya, Panjabi, Portuguese, Urdu, Galician, Hebrew, Korean, Catalan, Thai, Dutch, Indonesian, Vietnamese, Bulgarian, Filipino, Central Khmer, Lao, Turkish, Russian, Croatian, Swedish, Yoruba, Kurdish, Burmese, Malay, Czech, Finnish, Somali, Tagalog, Swahili, Sinhala, Kannada, Zhuang, Igbo, Xhosa, Romanian, Haitian, Estonian, Slovak, Lithuanian, Greek, Nepali, Assamese, Norwegian

- License: Apache 2.0

- Related Models: All FLAN-T5 Checkpoints

- Original Checkpoints: All Original FLAN-T5 Checkpoints

- Resources for more information:
