base_model: google/paligemma-3b-ft-docvqa-896

library_name: peft

license: apache-2.0

datasets:

- cmarkea/doc-vqa

language:

- fr

- en

pipeline_tag: visual-question-answering

paligemma-3b-ft-docvqa-896-lora

paligemma-3b-ft-docvqa-896-lora is a fine-tuned version of the google/paligemma-3b-ft-docvqa-896 model, specifically trained on the doc-vqa dataset published by Crédit Mutuel Arkéa. Optimized using the LoRA (Low-Rank Adaptation) method, this model was designed to enhance performance while reducing the complexity of fine-tuning.

During training, particular attention was given to linguistic balance, with a focus on French. The model was exposed to a predominantly French context, with a 70% likelihood of interacting with French questions/answers for a given image. It operates exclusively in bfloat16 precision, optimizing computational resources. The entire training process took 3 week on a single A100 40GB.

Thanks to its multilingual specialization and emphasis on French, this model excels in francophone environments, while also performing well in English. It is especially suited for tasks that require the analysis and understanding of complex documents, such as extracting information from forms, invoices, reports, and other text-based documents in a visual question-answering context.

Model Details

Model Description

- Developed by: Loïc SOKOUDJOU SONAGU and Yoann SOLA

- Model type: Multi-modal model (image+text)

- Language(s) (NLP): French, English

- License: Apache 2.0

- Finetuned from model [optional]: google/paligemma-3b-ft-docvqa-896

Usage

Model usage is simple via transformers API

from transformers import AutoProcessor, PaliGemmaForConditionalGeneration

from PIL import Image

import requests

import torch

device = "cuda" if torch.cuda.is_available() else "cpu"

model_id = "cmarkea/paligemma-3b-ft-docvqa-896-lora"

url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/tasks/car.jpg?download=true"

image = Image.open(requests.get(url, stream=True).raw)

model = PaliGemmaForConditionalGeneration.from_pretrained(

model_id,

torch_dtype=torch.bfloat16,

device_map=device,

).eval()

processor = AutoProcessor.from_pretrained("google/paligemma-3b-ft-docvqa-896")

# Instruct the model to create a caption in french

prompt = "caption fr"

model_inputs = processor(text=prompt, images=image, return_tensors="pt").to(model.device)

input_len = model_inputs["input_ids"].shape[-1]

with torch.inference_mode():

generation = model.generate(**model_inputs, max_new_tokens=100, do_sample=False)

generation = generation[0][input_len:]

decoded = processor.decode(generation, skip_special_tokens=True)

print(decoded)

Results

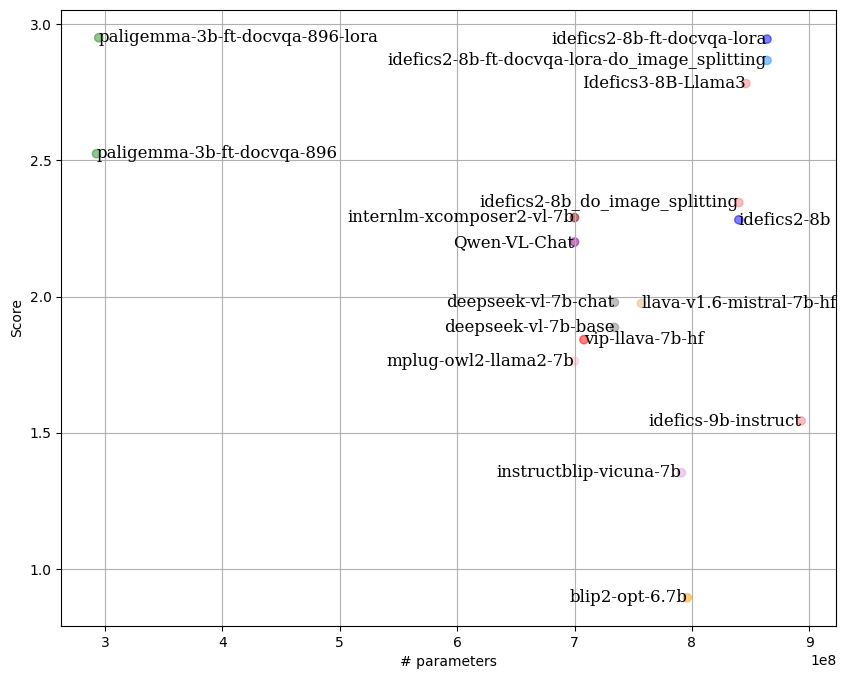

By following the LLM-as-Juries evaluation method, the following results were obtained using three judge models (GPT-4o, Gemini1.5 Pro and Claude 3.5-Sonnet). These models were evaluated based on the average of two criteria: response accuracy and completeness, similar to what the SSA metric aims to capture. This metric was adapted to the VQA context, with clear criteria for each score (0 to 5) to ensure the highest possible precision in meeting expectations.

Citation

@online{Depaligemma,

AUTHOR = {Loïc SOKOUDJOU SONAGU and Yoann SOLA},

URL = {https://huggingface.co/cmarkea/paligemma-3b-ft-docvqa-896-lora},

YEAR = {2024},

KEYWORDS = {Multimodal ; VQA},

}

Find the base model paper here.

- PEFT 0.11.1